1. Introduction

There is no clear definition of artificial intelligence (AI), but it is generally considered to be a computer system with the functions of human intelligence, such as learning, inference, and judgment (1,2). Machine learning refers to the technology by which computers learn from large amounts of data and automatically build algorithms and models to perform tasks such as classification and prediction (1,2). The field of AI has advanced considerably with the development of deep learning. Neural networks are mathematical models with properties similar to those of brain function, and deep learning is a machine-learning technique that enhances representational and learning ability by stacking neural networks in multiple layers (3,4). Image recognition is one capability that deep learning has greatly advanced; it is used for face recognition, automated driving, and other tasks (5).

The combination of robotics and AI also has numerous benefits in daily life. When a robot is equipped with AI, it functions as a machine that senses its surroundings and determines how to act. AI is also being introduced into the medical field; because it excels in image recognition, previous studies have reported the use of deep learning to diagnose diseases from X-ray, CT, ultrasound, and other images (6,7). These studies also describe the use of deep learning to diagnose diseases and predict patient prognosis based on information in medical records (6,7).

Minimally invasive surgery is now widespread in most surgical fields. Among minimally invasive approaches, the indications for robot-assisted surgery systems such as the da Vinci system have recently been expanded (8).

In recent years, the number of studies using deep learning to analyze surgical videos and apply the results to medical care has increased (9,10), and a growing number of studies on the development of autonomous surgical robots have been published (11,12).

The aim of the present review was to examine the possibility of fully autonomous surgical robots in the future. First, studies using deep learning to analyze videos of laparoscopic and robot-assisted surgery are described. Subsequently, studies on the development of autonomous surgical robots using AI are presented.

2. Analysis of laparoscopic surgery video using deep learning

One important step in the development of autonomous surgical robots is the recognition of surgical details. A number of studies have applied deep learning to laparoscopic and robot-assisted surgery (9,10). The following sections describe four aspects: i) Organ and instrument identification, ii) procedure and surgical phase recognition, iii) safe surgical navigation, and iv) surgical education (Table I).

Table I. Previous studies on surgical-related artificial intelligence analysis.

| Authors | Year | Procedure | Model | Dataset | Application | Performance score | (Refs.) |
|---|---|---|---|---|---|---|---|
| Zadeh et al | 2020 | Gynecologic surgery | Mask R-CNN | 461 images | Organ identification | Accuracy: 29.6% (ovary) and 84.5% (uterus) | (10) |
| Mascagni et al | 2022 | Laparoscopic cholecystectomy | CNN | 2,854 images | Organ identification | Average accuracy: 71.9% | (13) |
| Padovan et al | 2022 | Urologic surgery | Segmentation CNN | 971 images | Organ identification | IoU: 0.8067 (prostate) and 0.9069 (kidney) | (14) |
| Koo et al | 2022 | Liver surgery | CNN | 133 videos | Organ identification | Precision: 0.70-0.82 | (15) |
| Namazi et al | 2022 | Laparoscopic cholecystectomy | Recurrent CNN | 15 videos | Instrument identification | Mean precision: 0.59 | (16) |
| Yamazaki et al | 2022 | Laparoscopic gastrectomy | CNN | 19,000 images | Instrument identification | N.A. | (17) |
| Aspart et al | 2022 | Laparoscopic cholecystectomy | CNN | 122,470 images | Instrument identification | AUROC: 0.9107; specificity: 66.15%; sensitivity: 95% | (18) |
| Cheng et al | 2022 | Laparoscopic cholecystectomy | CNN | 156,584 images | Surgical phase recognition | Accuracy: 91% | (19) |
| Kitaguchi et al | 2022 | Transanal total mesorectal excision | CNN | 42 images | Surgical phase recognition | Accuracy: 93.2% | (20) |
| Kitaguchi et al | 2020 | Laparoscopic sigmoid colon resection | CNN | 71 cases | Surgical phase recognition | Accuracy: 91.9% | (21) |
| Twinanda et al | 2019 | Cholecystectomy and gastric bypass | CNN and LSTM network | 290 cases | Surgical time prediction | N.A. | (23) |
| Bodenstedt et al | 2019 | Laparoscopic interventions of various types | Recurrent CNN | 3,800 frames | Surgical time prediction | Overall average error: 37% | (24) |
| Igaki et al | 2022 | Total mesorectal excision | CNN | 600 images | Safe surgical navigation | Dice coefficient: 0.84 | (25) |
| Kumazu et al | 2021 | Robot-assisted gastrectomy | CNN | 630 images | Safe surgical navigation | N.A. | (26) |
| Moglia et al | 2022 | Virtual simulator for robot-assisted surgery | CNN | 176 medical students | Surgical education | Accuracy: >80% | (27) |
| Zheng et al | 2022 | Box trainer for laparoscopic surgery | Long short-term memory recurrent neural network | 30 medical students | Surgical education | Accuracy: 74.96% | (28) |

Organ and instrument identification. Previous research has described the identification of organs and anatomical structures in laparoscopic images using deep learning. Zadeh et al manually annotated the uterus and ovaries in 461 images from gynecological laparoscopic surgery. Mask Region-based Convolutional Neural Network (Mask R-CNN), a deep-learning method, was trained on this dataset to automatically segment the uterus, ovaries, and surgical instruments. Segmentation accuracy was measured as the percentage overlap between the area segmented by manual annotation and that segmented by Mask R-CNN. The accuracy values were 29.6, 84.5, and 54.5% for the ovary, uterus, and surgical instruments, respectively (10). The segmentation results for the ovaries were less satisfactory than those for the uterus and instruments, which may be explained by two main factors. First, the training dataset contained fewer ovary instances because this organ is often hidden by other structures (the fallopian tube or uterus). Second, the appearance of the ovaries varies widely across patients (10). To the best of our knowledge, no other studies have addressed the recognition of ovaries in surgical videos using deep learning. Because the shape of the uterus also varies across patients, variation in ovarian appearance is unlikely to be the only reason for the low segmentation accuracy. Accuracy may be improved by higher-quality annotation and by increasing the number of ovary instances in the training dataset.
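Zadeh et al reported segmentation accuracy as the percentage overlap between the manual annotation and the Mask R-CNN output, and Table I also lists intersection over union (IoU) and the Dice coefficient for other studies. The exact formula used in each study is not given here, so the following is only a minimal Python sketch of these two common overlap metrics for binary masks; the function name `overlap_scores` and the toy masks are hypothetical.

```python
def overlap_scores(pred, truth):
    """Return (IoU, Dice) for two binary masks of identical shape.

    Masks are nested lists of 0/1 values, e.g. a model's predicted organ
    mask and a manual annotation of the same laparoscopic frame.
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    inter = pred_area = truth_area = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            p, t = bool(p), bool(t)
            inter += p and t      # pixel labelled by both
            pred_area += p        # pixel labelled by the model
            truth_area += t       # pixel labelled by the annotator
    union = pred_area + truth_area - inter
    if union == 0:
        # Both masks empty: treated here as perfect agreement (a convention, not a standard).
        return 1.0, 1.0
    iou = inter / union
    dice = 2 * inter / (pred_area + truth_area)
    return iou, dice


# Toy 2x3 masks: model and annotator agree on 2 of the 4 pixels either one labelled.
predicted = [[1, 1, 0],
             [0, 1, 0]]
annotated = [[1, 0, 0],
             [0, 1, 1]]
iou, dice = overlap_scores(predicted, annotated)
print(f"IoU={iou:.3f}, Dice={dice:.3f}")  # IoU=0.500, Dice=0.667
```

Dice is a monotonic function of IoU (Dice = 2 × IoU / (1 + IoU)), so both rank predictions identically; the choice mainly affects how numbers from different studies can be compared.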

Mascagni et al (13) developed a deep-learning model to automatically segment the liver and gallbladder in laparoscopic images and evaluate safety criteria for laparoscopic cholecystectomy (LC). Adequate exposure of the surgical field helps prevent surgical complications, and the specific exposure required for safe LC is known as the critical view of safety (CVS). Three criteria must be met to achieve the CVS: i) Fat and fibrous tissue must be cleared from Calot's triangle, ii) the neck of the gallbladder must be separated from the gallbladder plate, and iii) only the cystic duct and cystic artery should be seen connecting to the gallbladder. In this study, 2,854 images from 201 LC videos were annotated and 402 images were segmented. The deep neural network consisted of a segmentation model that highlights the hepatocystic anatomy and a classification model that predicts achievement of the CVS criteria; the average accuracy of the classification model was 71.9% (13). If the accuracy of this system is improved in the future, the model may be able to confirm the CVS before the surgeon proceeds with the operation.
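The text reports a single average accuracy for predicting achievement of the three CVS criteria. How Mascagni et al averaged it is not stated here; the sketch below assumes that accuracy is computed per criterion and then averaged, and that the CVS is declared only when all three criteria are predicted as met. The criterion names, the 0.5 threshold, and the toy data are hypothetical.

```python
# Hypothetical names for the three CVS criteria described above.
CRITERIA = (
    "calot_triangle_cleared",
    "neck_separated_from_plate",
    "only_two_structures",
)


def cvs_achieved(probabilities, threshold=0.5):
    """The CVS counts as achieved only if every criterion is predicted as met."""
    return all(p >= threshold for p in probabilities)


def mean_criterion_accuracy(predictions, labels, threshold=0.5):
    """Per-criterion accuracy and its mean over the three criteria.

    predictions: one tuple of three probabilities per image.
    labels: one tuple of three booleans per image (expert annotation).
    """
    per_criterion = []
    for k in range(len(CRITERIA)):
        correct = sum((pred[k] >= threshold) == lab[k]
                      for pred, lab in zip(predictions, labels))
        per_criterion.append(correct / len(labels))
    return sum(per_criterion) / len(per_criterion), per_criterion


predictions = [(0.9, 0.8, 0.7), (0.9, 0.3, 0.6), (0.2, 0.4, 0.1)]
labels = [(True, True, True), (True, True, False), (False, False, False)]
mean_acc, per_criterion = mean_criterion_accuracy(predictions, labels)
print(round(mean_acc, 3))                      # 0.778
print([cvs_achieved(p) for p in predictions])  # [True, False, False]
```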

Padovan et al used deep learning on laparoscopic surgery videos to construct 3D organ models that accounted for position and rotation. They confirmed accuracy by superimposing the constructed models on actual organ images, and the accuracy was >80% in all tests (14). Koo et al noted that the initial registration of a 3D preoperative CT model to 2D laparoscopic images in an augmented reality system for liver surgery may help surgeons better identify internal anatomy. Their study aimed to automate this process using deep learning instead of the conventional manual method; specifically, the system automates the construction of the 3D model, the identification of the hepatic limbus in CT images, and the identification of the hepatic limbus in 2D laparoscopic video images (15). Thus, the integration of laparoscopic video and 3D CT may be useful for preoperative simulation in the future.
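Both registration studies above depend on aligning a 3D organ model with 2D laparoscopic images while accounting for position and rotation. The sketch below is not the deep-learning method of either study; it only illustrates, under simplifying assumptions (a pinhole camera with a hypothetical focal length of 500 pixels, rotation about the optical axis only, and known point correspondences), how a candidate pose can be scored by reprojection error and the best pose chosen by a crude search.

```python
import math


def project(points_3d, theta, translation, focal=500.0, center=(320.0, 240.0)):
    """Project 3D model points (camera frame, mm) to pixel coordinates.

    The pose is deliberately simplified to a rotation `theta` (radians) about
    the optical axis followed by a translation (tx, ty, tz).
    """
    c, s = math.cos(theta), math.sin(theta)
    tx, ty, tz = translation
    pixels = []
    for x, y, z in points_3d:
        xr, yr = c * x - s * y, s * x + c * y   # rotate about the z-axis
        X, Y, Z = xr + tx, yr + ty, z + tz      # then translate
        if Z <= 0:
            raise ValueError("point lies behind the camera")
        pixels.append((focal * X / Z + center[0], focal * Y / Z + center[1]))
    return pixels


def mean_reprojection_error(projected, observed):
    """Mean pixel distance between projected model points and observed landmarks."""
    return sum(math.dist(p, o) for p, o in zip(projected, observed)) / len(observed)


# Synthetic example: landmarks "observed" in the image under a known pose.
model = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
shift = (0.0, 0.0, 100.0)
observed = project(model, 0.1, shift)

# Crude registration: pick the rotation with the lowest reprojection error.
candidates = [i * 0.05 for i in range(5)]
best_theta = min(
    candidates,
    key=lambda t: mean_reprojection_error(project(model, t, shift), observed),
)
print(best_theta)  # 0.1
```

Real systems estimate all six pose parameters and must also find the correspondences, which is where learned organ-contour detection becomes useful.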

In the analysis of laparoscopic surgery videos using deep learning, the identification of surgical instruments is as important as the identification of organs, and several studies on surgical instrument identification have been reported. For example, Namazi et al developed an AI model called LapToolNet to detect surgical instruments, such as bipolar forceps, clippers, graspers, hooks, irrigators, scissors, and specimen bags, in each frame of a laparoscopic video, with agreement rates of 80% or higher for all instruments (16). Several studies have addressed surgical instrument recognition in this way, but the results are often not very different from those of organ recognition (16,17). In another study, patterns of instrument use were compared among surgeons with different skill levels during laparoscopic gastrectomy for gastric cancer. A total of 33 cases of D2 suprapancreatic lymphadenectomy for gastric cancer were evaluated retrospectively, and patterns of surgical device use were compared between surgeons certified under the Endoscopic Surgical Techniques Certification System of the Japanese Society for Endoscopic Surgery and non-certified surgeons. The percentage of time spent using incision forceps and clip appliers was higher among non-certified surgeons than among certified surgeons. For suprapancreatic lymphadenectomy, the percentage of time spent using energy instruments, clip appliers, and grasping forceps differed significantly between the two groups (17).
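The comparison above is based on the percentage of time each instrument is used. Assuming a detector returns the set of instruments visible in each frame and frames are sampled at a constant rate, that percentage can be approximated from per-frame detections as in this minimal sketch; the function name and instrument labels are hypothetical.

```python
from collections import Counter


def instrument_time_share(frame_detections):
    """Percentage of frames in which each instrument is detected.

    `frame_detections` holds one set of instrument labels per video frame.
    With a constant frame rate, frame share approximates time share.
    """
    counts = Counter()
    for detected in frame_detections:
        counts.update(set(detected))  # count each instrument at most once per frame
    n_frames = len(frame_detections)
    return {name: 100.0 * c / n_frames for name, c in sorted(counts.items())}


frames = [{"grasper", "energy device"}, {"grasper"}, {"clip applier"}, set()]
print(instrument_time_share(frames))
# {'clip applier': 25.0, 'energy device': 25.0, 'grasper': 50.0}
```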

One of the key steps in LC is clipping the cystic artery before dividing it. For safe clipping, the clip applier must be fully visible while its jaws surround the cystic artery or cystic duct. Using videos of 300 cholecystectomies, Aspart et al developed a deep-learning model that provides real-time feedback on whether the clip applier is adequately visible (18). Notably, deep learning has also been used to demonstrate differences between skilled and less skilled surgeons (17). Such analysis may serve in the future as an educational tool to improve surgical skills by identifying so-called ‘good surgeries’.
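Table I reports AUROC, sensitivity, and specificity for the clip-applier study. As a hedged illustration of how these metrics relate for a frame-level binary task (here, hypothetically, whether the clip applier tips are adequately visible), the sketch below computes them from model scores and reference labels; the 0.5 threshold and the data are assumptions.

```python
def confusion_counts(scores, labels, threshold=0.5):
    """Return (tp, fn, tn, fp) for binary labels and model scores."""
    tp = fn = tn = fp = 0
    for score, positive in zip(scores, labels):
        predicted = score >= threshold
        if positive and predicted:
            tp += 1
        elif positive:
            fn += 1
        elif predicted:
            fp += 1
        else:
            tn += 1
    return tp, fn, tn, fp


def sensitivity_specificity(scores, labels, threshold=0.5):
    tp, fn, tn, fp = confusion_counts(scores, labels, threshold)
    return tp / (tp + fn), tn / (tn + fp)


def auroc(scores, labels):
    """Probability that a random positive frame scores higher than a random negative one."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical frame-level task: positive = clip applier tips adequately visible.
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [True, True, True, False, False, False]
print(sensitivity_specificity(scores, labels))  # (0.6666666666666666, 0.6666666666666666)
print(round(auroc(scores, labels), 3))          # 0.889
```

Sensitivity and specificity depend on the chosen threshold, whereas AUROC summarizes ranking quality over all thresholds; this is how a model can report a high AUROC together with modest specificity.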

Procedure and surgical phase recognition. Recognition of the surgical procedure and its steps is as important as the identification of organs and surgical instruments. Cheng et al used deep learning to recognize and analyze the surgical steps in multiple LC videos. In this study, 163 LC videos sourced from four medical centers were evaluated, and the accuracy of the developed model in recognizing the surgical steps was 91% (19). Breaking down a surgical procedure into steps facilitates the acquisition, storage, and organization of intraoperative video data. However, manual organization of these data is time-consuming; therefore, Kitaguchi et al developed a deep-learning model to automatically segment the surgical steps of transanal total mesorectal excision, achieving an overall accuracy of 93.2% (20). In another study, Kitaguchi et al developed a model that uses CNN-based deep learning, trained on manually annotated data, to recognize the steps of laparoscopic sigmoid colon resection. The procedure was divided into 11 steps, and the recognition accuracy was 91.9% (21).
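Phase recognition models typically output a label for each frame, and organizing video data by step then means grouping consecutive frames. The sketch below, assuming one label per frame at a fixed frame rate, computes frame-wise accuracy against a reference annotation and collapses the predicted labels into time segments; the phase names and frame rate are hypothetical.

```python
from itertools import groupby


def phase_segments(frame_phases, fps=1.0):
    """Collapse per-frame phase labels into (phase, start_s, end_s) segments."""
    segments, start = [], 0
    for phase, run in groupby(frame_phases):
        length = sum(1 for _ in run)
        segments.append((phase, start / fps, (start + length) / fps))
        start += length
    return segments


def frame_accuracy(predicted, reference):
    """Fraction of frames whose predicted phase matches the reference annotation."""
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


reference = ["access", "access", "dissection", "dissection", "dissection", "closure"]
predicted = ["access", "dissection", "dissection", "dissection", "dissection", "closure"]
print(frame_accuracy(predicted, reference))  # 0.8333333333333334
print(phase_segments(predicted))
# [('access', 0.0, 1.0), ('dissection', 1.0, 5.0), ('closure', 5.0, 6.0)]
```

Frame-wise accuracy can stay high even when a few misclassified frames split a step into spurious segments, so segment-level checks are a useful complement when the output is used to index videos.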

Indocyanine green is sometimes used to assess perfusion after bowel resection and anastomosis for deep endometriosis. In another study, a model predicting perfusion after bowel anastomosis was developed by using deep learning to analyze images of the bowel after indocyanine green injection (22). The course of a surgical procedure is often unpredictable, and it is difficult to estimate its duration in advance, which complicates the scheduling of operations. Therefore, surgical time prediction is also an important aspect of surgical recognition. Fewer studies have been reported on surgical time prediction using AI than on other tasks. Twinanda et al developed a deep-learning pipeline called RSDNet that automatically predicts the remaining surgery duration (RSD) using only visual information from laparoscopic images. A key feature of RSDNet is that it can easily accommodate various types of surgery without relying on manual annotation during training (23).
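One reason a model such as RSDNet can be trained without manual annotation is that, for a recorded procedure, the remaining duration at any moment follows from the timestamps alone. The sketch below illustrates only this label-generation idea, not the RSDNet architecture; the function name and sampling interval are hypothetical.

```python
def rsd_targets(total_duration_min, step_min=1.0):
    """Training targets for one recorded video, derived from its length alone.

    Returns (elapsed, progress, remaining) tuples sampled every `step_min`
    minutes, where remaining = total - elapsed. No manual labels are needed
    because the total duration is known once the recording is complete.
    """
    n_steps = int(total_duration_min // step_min)
    targets = []
    for i in range(n_steps + 1):
        elapsed = i * step_min
        targets.append((elapsed, elapsed / total_duration_min, total_duration_min - elapsed))
    return targets


for elapsed, progress, remaining in rsd_targets(90.0, step_min=30.0):
    print(f"t={elapsed:>4.0f} min  progress={progress:.2f}  RSD={remaining:.0f} min")
```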

Bodenstedt et al developed a convolutional neural network-based method for the continuous prediction of laparoscopic surgery duration from endoscopic images. The method was evaluated on videos of various types of laparoscopic interventions, and the results showed an overall average error of 37% and an average half-time error of approximately 28% (24). Recognition of surgical steps shows that deep learning can capture changes in higher-order features, such as the progression of the surgical procedure, which may lead to the development of surgical navigation systems and autonomous surgical robots in the future.
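The exact error definitions used by Bodenstedt et al are not given here. Assuming that the error at each time point is the absolute difference between the predicted and actual total duration divided by the actual duration, and that the half-time error is taken at the time point closest to the middle of the operation, the two summary figures could be computed as in this sketch (the function name and all values are hypothetical).

```python
def duration_errors(predictions, actual_total):
    """Relative errors of total-duration predictions made during one operation.

    predictions: (elapsed_min, predicted_total_min) pairs.
    Returns (mean relative error over all time points,
             relative error at the time point closest to half the actual duration).
    """
    rel = [(t, abs(pred - actual_total) / actual_total) for t, pred in predictions]
    overall = sum(err for _, err in rel) / len(rel)
    halftime = min(rel, key=lambda te: abs(te[0] - actual_total / 2))[1]
    return overall, halftime


# A 120-min operation; the estimate improves as more of the video is seen.
preds = [(0, 60.0), (30, 90.0), (60, 110.0), (90, 120.0)]
overall, halftime = duration_errors(preds, 120.0)
print(f"overall={overall:.1%}, half-time={halftime:.1%}")  # overall=20.8%, half-time=8.3%
```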

Safe surgical navigation. One of the most important factors in surgery is making the incision in a safe area, which can be difficult depending on the skill level of the individual surgeon. Thus, several studies on the development of AI-based navigation systems that identify safe incision planes have been reported.

Igaki et al developed AI-based navigation of the total mesorectal excision plane in laparoscopic colorectal surgery. A total of 32 videos of laparoscopic left-sided colorectal resections were analyzed using deep learning. The developed model helped identify and highlight the target area; however, more images are required to improve its accuracy (25). Kumazu et al defined the plane for safe incision by loose connective tissue fibers (LCTFs) and developed a model that automatically segments LCTFs. A U-Net-based deep-learning model was created using surgical videos of robot-assisted gastrectomy, and its segmentation results were output. Twenty surgeons then evaluated the segmentation results by answering two questions: i) Is this AI highly sensitive in recognizing LCTFs (rated on a 5-point scale)? and ii) how many frames does the AI misrecognize? The mean score for question 1 was 3.52, and the mean value for question 2 was 0.14. This suggests that AI can recognize difficult anatomical structures and assist surgeons during surgery (26). With further development, this model may be applicable to other operations in addition to gastrectomy. However, although LCTF recognition may help identify a safe incision line, it does not necessarily provide effective navigation along an appropriate incision line, and resolving this issue remains a future challenge. Developing such a navigation system is important, and it may in turn lead to the development of autonomous surgical robots.

Surgical education. Deep learning has also been applied to surgical education. Moglia et al used deep learning to develop a model that predicts the proficiency of medical students on a surgical simulator based on their training data. They then used the model to predict proficiency from the simulator data of untrained medical students, with an accuracy of >80% (27).

Excessive stress can negatively affect surgeons' performance, and Zheng et al developed a deep-learning model to detect, in real time, surgical movements performed while the surgeon appears to be under stress. In this study, stress-sensitive surgical movements were identified; such detection may be integrated into robot-assisted surgical platforms and used for stress management in the future (28). With further development, such systems may make it possible to use deep learning in surgical education.

3. Autonomous surgical robots

Description of autonomy. Autonomy refers to being independent and capable of making decisions; however, the term lacks a clear, standardized definition and is often used inappropriately. For example, the da Vinci system, which is currently used in numerous hospitals, is commonly called a surgical robot, even though, narrowly defined, it is a high-tech motion-repeater manipulator. This use of the term ‘robot’ is inaccurate, but unfortunately, the name has stuck. Therefore, when developing an autonomous surgical system, the term ‘autonomy’ should be clearly defined to avoid misnomers (11). Han et al and Yang et al proposed a six-level framework describing the autonomy of medical robots, similar to that used for autonomous vehicles. Level 0 (no autonomy) includes surgical robots with motion-scaling capabilities that respond to the surgeon's commands. Level 1 (robotic assistance) includes robots that provide some assistance while humans predominantly control the system. Level 2 (task autonomy) includes robots that autonomously perform a specific task initiated by a human; unlike Level 1, the operator controls the system discretely rather than continuously. An example is an automated suturing system: the surgeon indicates where to suture, and the robot performs the task autonomously while the surgeon monitors and intervenes as needed. Level 3 (conditional autonomy) includes robots that can perform system-generated tasks but rely on humans to select among different plans; such a robot can perform tasks without close supervision. Level 4 (high autonomy) includes robots that can make decisions during surgery, but only under the supervision of a qualified physician. Level 5 (full autonomy) includes robots that do not require a human at all, that is, a ‘robotic surgeon’ that can perform an entire operation (12,29).
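When tagging systems or experiments by autonomy level, the six levels described above can be encoded as an ordered type so that levels can be compared directly. The following Python sketch is one possible encoding; the member names and the `human_role` summaries are paraphrases chosen for illustration, not part of the cited frameworks.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Six levels of autonomy for medical robots, as summarized in the text."""
    NO_AUTONOMY = 0           # responds to surgeon commands, e.g. motion scaling
    ROBOT_ASSISTANCE = 1      # some assistance; the human predominantly controls the system
    TASK_AUTONOMY = 2         # performs a human-initiated task; discrete human control
    CONDITIONAL_AUTONOMY = 3  # generates task plans; the human selects among them
    HIGH_AUTONOMY = 4         # makes decisions under a qualified physician's supervision
    FULL_AUTONOMY = 5         # no human required


def human_role(level: AutonomyLevel) -> str:
    """Hypothetical one-line summary of the human's role at each level."""
    if level <= AutonomyLevel.ROBOT_ASSISTANCE:
        return "continuous control"
    if level == AutonomyLevel.TASK_AUTONOMY:
        return "initiates the task, monitors, and intervenes as needed"
    if level == AutonomyLevel.CONDITIONAL_AUTONOMY:
        return "selects among system-generated plans"
    if level == AutonomyLevel.HIGH_AUTONOMY:
        return "supervises decisions"
    return "none"


# The automated suturing example from the text sits at Level 2.
suturing = AutonomyLevel.TASK_AUTONOMY
print(int(suturing), suturing.name, "->", human_role(suturing))
```

Using `IntEnum` rather than plain strings keeps the ordering explicit, so checks such as "at least conditional autonomy" become simple comparisons.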

Two additional factors are important for autonomous surgery: Recognition and task complexity. Recognition is classified as follows: Level 1, awareness of the environment; Level 2, understanding of the current status; and Level 3, prediction of the future status. Tasks are classified by Level of Task Complexity (LoTC) as follows: LoTC 1, simple training tasks, such as those involving distance considerations; LoTC 2, advanced training tasks; LoTC 3, simple surgical tasks; LoTC 4, advanced surgical tasks, such as suturing; and LoTC 5, complex surgical tasks, such as stopping sudden bleeding (30). The development of autonomous surgical robots should take these classification levels into account.
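The recognition levels and the LoTC scale can be recorded together for a given system, with validation against the defined ranges, so that claims about a robot's capabilities are stated on both axes. This is a minimal sketch; the `CapabilityProfile` class, the level descriptions (paraphrased from the text), and the example classification are hypothetical.

```python
from dataclasses import dataclass

RECOGNITION_LEVELS = {
    1: "awareness of the environment",
    2: "understanding of the current status",
    3: "prediction of the future status",
}
TASK_COMPLEXITY = {
    1: "simple training tasks",
    2: "advanced training tasks",
    3: "simple surgical tasks",
    4: "advanced surgical tasks (e.g. suturing)",
    5: "complex surgical tasks (e.g. stopping sudden bleeding)",
}


@dataclass(frozen=True)
class CapabilityProfile:
    """Hypothetical record pairing a recognition level with an LoTC level."""
    recognition: int
    lotc: int

    def __post_init__(self):
        if self.recognition not in RECOGNITION_LEVELS:
            raise ValueError(f"unknown recognition level: {self.recognition}")
        if self.lotc not in TASK_COMPLEXITY:
            raise ValueError(f"unknown LoTC: {self.lotc}")

    def describe(self) -> str:
        return (f"recognition level {self.recognition} "
                f"({RECOGNITION_LEVELS[self.recognition]}), "
                f"LoTC {self.lotc} ({TASK_COMPLEXITY[self.lotc]})")


# e.g. a suturing robot that tracks tissue but does not anticipate future states
print(CapabilityProfile(recognition=2, lotc=4).describe())
```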

Autonomous (or semi-autonomous) surgical robots developed to date. This section introduces the autonomous (or semi-autonomous) surgical robots that have been developed thus far. Despite the increasing adoption of robot-assisted surgery, surgical procedures on soft tissues are still performed entirely under the manual control of human surgeons. Shademan et al developed the Smart Tissue Autonomous Robot (STAR), a supervised autonomous surgical robot that can perform complex surgical procedures that could previously only be performed by humans (31).

The first surgical tasks STAR was designed to perform were anastomosis and suturing. These tasks are important because suturing soft tissues (such as the intestinal tract, urinary tract, and vaginal cuff) is a common surgical procedure that requires repeatability, accuracy, and efficiency, making it a suitable target for autonomous surgical robots. Autonomous robotic surgery may offer benefits in terms of efficacy, safety, and reproducibility, regardless of the skill and experience of the individual surgeon. However, autonomous anastomosis is challenging because it requires complex imaging, navigation, and highly adaptable and precise execution. In a study reported in 2016, STAR performed intestinal anastomosis in open surgery in pigs. This phase of STAR was characterized by two features: i) A 3D visual tracking system using near-infrared fluorescence imaging and ii) an automated suturing algorithm. Suture consistency, anastomotic leak pressure, number of mistakes, and completion time were compared with those of robot-assisted surgery and manual laparoscopic surgery, and STAR was superior (31).

Since then, STAR has undergone a number of improvements. For example, an autonomous 3D path-planning system was developed for STAR that uses biocompatible near-infrared markers to achieve precise incisions in complex 3D soft tissues; this approach reduced the incision path error compared with previous autonomous path planning (32). Other improvements include 3D imaging endoscopy and a laparoscopic suturing tool, together with a suture-planning strategy for automated anastomosis; this tool was 2.9 times more accurate than manual suturing (33). Anastomosis performed by an autonomous robot may improve surgical outcomes by ensuring more accurate suture spacing and bite size than manual anastomosis. However, this is difficult to achieve without capabilities such as continuous tissue detection and 3D path planning, because soft tissues have irregular shapes and deform unpredictably. Therefore, Kam et al developed a new 3D path-planning strategy for STAR that enables semi-autonomous robotic anastomosis in deformable tissues. STAR using this algorithm was compared with anastomosis completed by a surgeon on synthetic vaginal cuff tissue, and STAR achieved 2.6 times better consistency in suture spacing and 2.4 times better consistency in suture bite size than manual performance (34). STAR was also used to develop and analyze shared control strategies for human-robot collaboration in surgical scenarios. Specifically, a trust-based shared control strategy was developed, and its accuracy was analyzed by evaluating pattern-tracking performance under both autonomous and manual control. In an experiment with pig fat samples, by combining the advantages of autonomous robot control with complementary human skills, the strategy improved cutting accuracy by 6.4% while reducing operator work time by 44% compared with manual control (35).
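The trust model used in the STAR shared control study is not described here. As a generic illustration only, the sketch below blends autonomous and human motion commands with a weight in [0, 1] and adjusts that weight with a hypothetical rule based on tracking error; the function names, tolerance, and update rate are assumptions, not the authors' method.

```python
def clamp(value, low=0.0, high=1.0):
    return max(low, min(high, value))


def shared_command(auto_cmd, human_cmd, trust):
    """Blend autonomous and human motion commands component by component.

    `trust` in [0, 1] is the weight given to the autonomous command:
    1.0 lets the robot follow its own plan, 0.0 gives the operator full control.
    """
    alpha = clamp(trust)
    return [alpha * a + (1.0 - alpha) * h for a, h in zip(auto_cmd, human_cmd)]


def update_trust(trust, tracking_error_mm, tolerance_mm=1.0, rate=0.2):
    """Hypothetical rule: raise trust while the autonomous tracking error stays
    within tolerance, lower it otherwise."""
    step = rate if tracking_error_mm <= tolerance_mm else -rate
    return clamp(trust + step)


trust = 0.5
trust = update_trust(trust, tracking_error_mm=2.0)  # large error: shift control to the human
print(round(trust, 2))                                # 0.3
print(shared_command([1.0, 0.0], [0.0, 1.0], 0.25))   # [0.25, 0.75]
```

Linear blending is the simplest arbitration scheme; published shared-control systems often use richer trust models, but the weighting idea is the same.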

The latest STAR study describes a novel technique for in vivo autonomous robotic laparoscopic surgery. The autonomous system developed in this study can track tissue position and deformation, interact with humans, and execute complex surgical plans (36). With this improved autonomous strategy, the operator can select among surgical plans generated by the system, and the robot can perform various tasks independently. Furthermore, needle placement corrections, suture spacing, completion time, and the rate of intestinal suture failure were compared with those of skilled surgeons and robot-assisted surgical techniques, and STAR outperformed both. Another unique feature reported by this study was the ability to perform subperitoneal surgery (36). However, it remains unclear whether STAR can also automate incision and hemostasis, because its development has so far focused mainly on suturing.

Another reported autonomous surgical robot is the RAVEN-II system. Its features are as follows: i) It provides software and hardware to support the research and development of surgical robots; ii) it supports advanced robotics research, such as computer vision, motion planning, and machine learning; iii) it establishes a compatible software environment; and iv) it provides a hardware platform that allows solid experimental evaluation (37). Using RAVEN-II, a prototype medical robotic system has been developed to autonomously detect and remove residual brain tumor after the majority of the tumor has been removed by conventional surgery. The scenario assumes that most of the tumor has been removed, leaving an exposed cavity about the size of a ping-pong ball. The system is equipped with a multimodal scanning fiber endoscope, a suction device for blood removal, and multiple robotic arms with instruments for brain tissue resection. The automated surgical procedure is performed in six subtasks: i) Medical image acquisition, which involves scanning the surgical cavity after tumor removal; ii) medical image processing, which involves 3D reconstruction of the cavity and recognition of residual tumor; iii) creation of an ablation plan; iv) selection of the plan by the surgeon; v) execution of the plan by the robot; and vi) verification of the ablation results (38).
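The six subtasks above form a loop in which the robot plans and executes but the surgeon selects the plan. The following sketch simulates that control flow with stubbed sensing on a toy cavity map; every function, threshold, and value is hypothetical and stands in for the real imaging and robotic components.

```python
def acquire_scan(cavity):
    """i) Image acquisition (stub): read the tumour signal at each cavity location."""
    return dict(cavity)


def find_residual(scan, threshold=0.5):
    """ii) Image processing (stub): locations whose signal suggests residual tumour."""
    return sorted(loc for loc, signal in scan.items() if signal >= threshold)


def make_plans(targets, scan):
    """iii) Candidate ablation plans: ablate all targets, or only the strongest signals."""
    return {
        "complete": targets,
        "conservative": [loc for loc in targets if scan[loc] >= 0.8],
    }


def execute(plan, cavity):
    """v) Robotic ablation (simulated): treated locations lose their tumour signal."""
    for loc in plan:
        cavity[loc] = 0.0


def resect_residual(cavity, choose_plan, max_rounds=3):
    """Run the loop; `choose_plan` stands in for the surgeon (step iv)."""
    for _ in range(max_rounds):
        scan = acquire_scan(cavity)
        targets = find_residual(scan)
        if not targets:  # vi) verification: nothing left above threshold
            return True
        plans = make_plans(targets, scan)
        execute(plans[choose_plan(plans)], cavity)
    return not find_residual(acquire_scan(cavity))


cavity = {(0, 0): 0.9, (0, 1): 0.2, (1, 1): 0.6}
print(resect_residual(dict(cavity), lambda plans: "complete"))      # True
print(resect_residual(dict(cavity), lambda plans: "conservative"))  # False
```

Keeping plan selection as an injected callback mirrors the conditional-autonomy design: the robot proposes and executes, but a human decision gates every ablation.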

Another experimental system is the da Vinci Research Kit (dVRK), a joint industry-academia effort to repurpose retired da Vinci systems (Intuitive Surgical, Inc.) as a research platform to promote surgical robotics research (https://research.intusurg.com/index.php/Main_Page). This platform facilitates the entry of new research groups into the field of surgical robotics. For example, in the master tool manipulator (MTM) of the dVRK, hysteresis forces from the electrical cables of the robotic joints often prevent accurate parameter estimation in gravity-compensation models, because these forces are comparable in magnitude to gravity and are difficult to separate from it during identification. Therefore, a strategy has been proposed that separates these two mixed forces and estimates each with its own learning-based algorithm. A specially designed Elastic Hysteresis Neural Network model was employed to capture the external (cable) hysteresis, and the resulting gravity compensation method for the dVRK MTM showed improvement over previous methods (39).
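The dVRK study separates gravity from cable hysteresis using learning-based models, including an Elastic Hysteresis Neural Network. The toy example below does not reproduce that method. It replaces the learned hysteresis with a simple direction-dependent offset on a single hypothetical joint, only to illustrate why an unmodeled second force biases a gravity-only fit and why modeling both terms separately recovers the gravity parameters. All names and parameter values are hypothetical.

```python
import math


def solve(A, b):
    """Solve a small linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_joint_model(samples, with_hysteresis=True):
    """Least-squares fit of tau = a*cos(q) + b*sin(q) [+ h*direction].

    samples: (q_rad, direction, measured_torque) with direction = +1 or -1.
    """
    rows, ys = [], []
    for q, direction, tau in samples:
        features = [math.cos(q), math.sin(q)]
        if with_hysteresis:
            features.append(float(direction))
        rows.append(features)
        ys.append(tau)
    k = len(rows[0])
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    Atb = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(AtA, Atb)


# Synthetic joint: gravity terms a=0.8, b=0.3 plus a direction-dependent offset h=0.1.
A_TRUE, B_TRUE, H_TRUE = 0.8, 0.3, 0.1
angles = [i * 0.1 for i in range(-10, 11)]
samples = [(q, d, A_TRUE * math.cos(q) + B_TRUE * math.sin(q) + H_TRUE * d)
           for q in angles for d in (1, -1)]

a, b, h = fit_joint_model(samples)  # both terms modelled
upward_only = [s for s in samples if s[1] == 1]
a_biased, b_biased = fit_joint_model(upward_only, with_hysteresis=False)
print(f"joint fit: a={a:.3f}, b={b:.3f}, h={h:.3f}")  # a=0.800, b=0.300, h=0.100
print(f"gravity-only fit: a={a_biased:.3f}")          # noticeably above 0.8
```

Real cable hysteresis depends on motion history rather than direction alone, which is why a learned model is used in practice; the toy only shows the identifiability problem.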

Ethics. Although it will still take a long time to develop a fully autonomous surgical system, a hybrid system may be realized in the near future. Therefore, ethical issues related to autonomous surgical systems need to be discussed (27).

One of the most controversial ethical issues is transparency. In the three-part sequence of operations, namely input, internal state (the deep-learning algorithm), and output, full transparency is not ensured. This is especially true for the algorithm, owing to the ‘black box’ nature of deep-learning systems and the non-disclosure of source code for reasons of copyright law and protection of trade secrets (12,27). This may prevent physicians and patients from trusting robots. Another concern is responsibility for the system used: is the developer or the physician using the system responsible? For example, who can be held responsible for surgical complications caused by an autonomous surgical robot? Who is at fault, and who should be held accountable, if an anomaly such as loss of communication occurs during teleoperation? One proposed solution regarding liability is that, as in the case of autonomous vehicles, the surgeon stays in the same room as the surgical robot and can take control at any time. Another option is to use a limited system that does not have full autonomy but assists the surgeon in routine surgery (12,27). Discussion of ethics and other regulations should be completed by the time an autonomous surgical robot that is safe and performs more consistently than a human surgeon is developed.

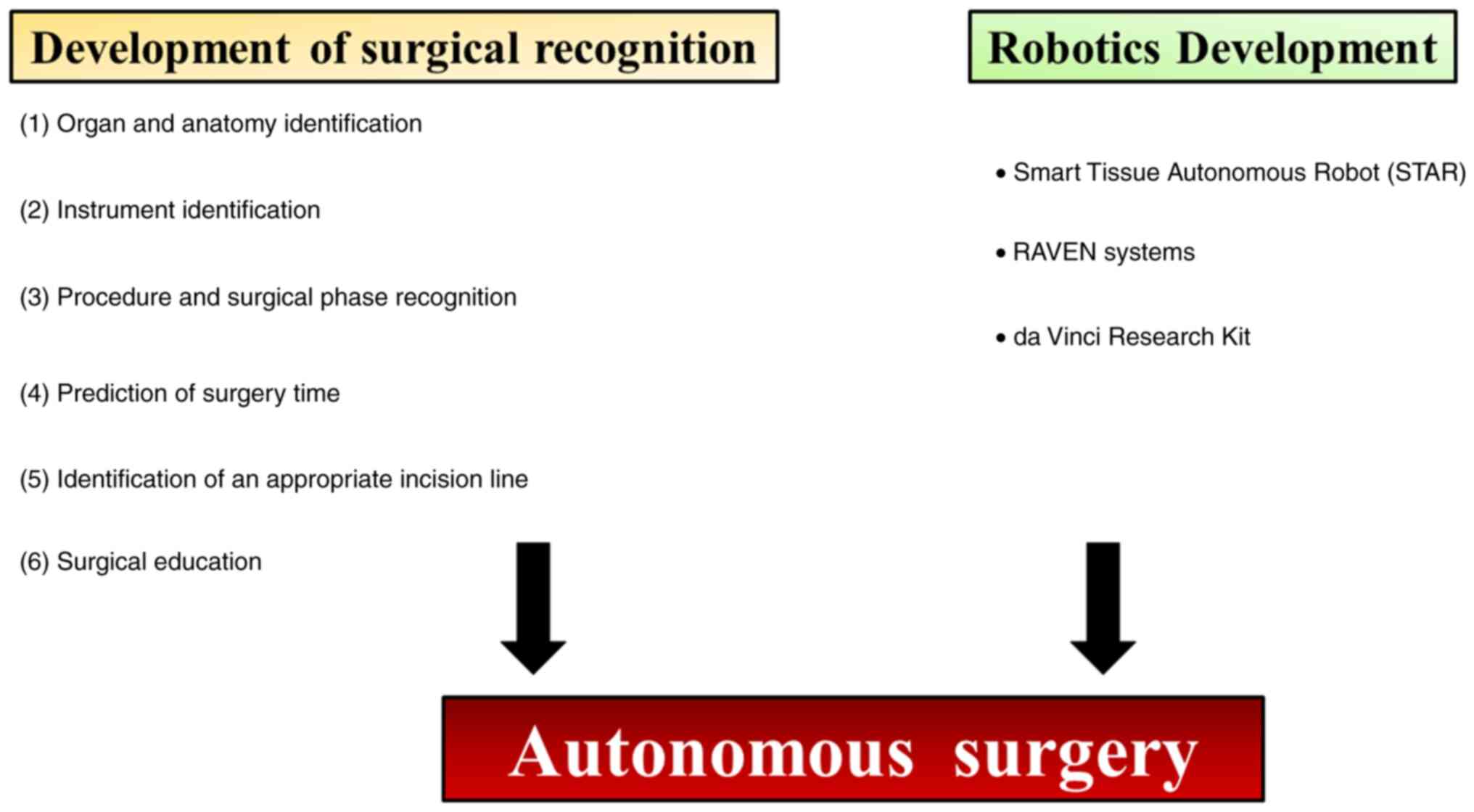

4. Conclusion

Although the clinical application of fully autonomous surgical robots may still take some time, partially autonomous surgical robots may see practical use in the not-too-distant future. For this purpose, surgical recognition and robotics need to be advanced through deep learning using surgical videos (Fig. 1). In this review, various studies on the recognition of surgical videos, including organ recognition, surgical instrument recognition, and surgical education, were examined. Among these tasks, identification of the resection site is considered the most important. However, the accuracy of currently reported AI models appears to be still far from that required for clinical application. The ultimate goal is for physicians to be able to perform surgery safely with navigation assistance provided by AI models. To improve recognition accuracy, it will be necessary to use public databases of surgical videos and to develop the corresponding programs. This review also described autonomous surgical robots. The STAR system is the most widely reported, and the development of automatic suturing and anastomosis systems is progressing with further innovations. In the future, applications for other surgical techniques, such as incision, will need to be developed. For the time being, semi-autonomous systems that can perform simple tasks, with a human surgeon on standby to intervene in an emergency, should be developed. To ultimately develop an autonomous surgical robot, the navigation system must be integrated with the surgical robot, as discussed above. In particular, a deep-learning model is needed that can feed the results recognized by the navigation system back into the surgical maneuvers.

Acknowledgements

Not applicable.

Funding

Funding: No funding was received.

Availability of data and materials

Not applicable.

Authors' contributions

KS, ST, YT, AT, YM, MM, TI, OWH, and YO contributed to literature research, as well as manuscript writing and review. KS, ST, YT, and AT designed the figure and table. KS, MM, TI, OWH, and YO conceptualized and supervised the study. Data authentication is not applicable. All authors read and approved the final manuscript.

Ethics approval and consent to participate

Not applicable.

Patient consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

References

|

1

|

Moor J: The Dartmouth College artificial

intelligence conference: The next fifty years. AI Mag. 27:87–89.

2006.

|

|

2

|

Russell S and Norvig P: Artificial

intelligence: A modern approach. Prentice Hall, Upper Saddle River,

NJ, 1995.

|

|

3

|

Nilsson NJ: Artificial intelligence: A new

synthesis. Morgan Kaufmann, Burlington, MA, 1998.

|

4. Shinde PP and Shah S: A review of machine learning and deep learning applications. In: Fourth International Conference on Computing Communication Control and Automation (ICCUBEA). IEEE, 2018.

5. Emmert-Streib F, Yang Z, Feng H, Tripathi S and Dehmer M: An introductory review of deep learning for prediction models with big data. Front Artif Intell. 3(4)2020.

6. Hamamoto R, Suvarna K, Yamada M, Kobayashi K, Shinkai N, Miyake M, Takahashi M, Jinnai S, Shimoyama R, Sakai A, et al: Application of artificial intelligence technology in oncology: Towards the establishment of precision medicine. Cancers (Basel). 12(3532)2020.

7. Sone K, Toyohara Y, Taguchi A, Miyamoto Y, Tanikawa M, Uchino-Mori M, Iriyama T, Tsuruga T and Osuga Y: Application of artificial intelligence in gynecologic malignancies: A review. J Obstet Gynaecol Res. 47:2577–2585. 2021.

8. Miyamoto Y, Tanikawa M, Sone K, Mori-Uchino M, Tsuruga T and Osuga Y: Introduction of minimally invasive surgery for the treatment of endometrial cancer in Japan: A review. Eur J Gynaecol Oncol. 42:10–17. 2021.

9. Moglia A, Georgiou K, Georgiou E, Satava RM and Cuschieri A: A systematic review on artificial intelligence in robot-assisted surgery. Int J Surg. 95(106151)2021.

10. Madad Zadeh S, Francois T, Calvet L, Chauvet P, Canis M, Bartoli A and Bourdel N: SurgAI: Deep learning for computerized laparoscopic image understanding in gynaecology. Surg Endosc. 34:5377–5383. 2020.

11. Gültekin IB, Karabük E and Köse MF: ‘Hey Siri! Perform a type 3 hysterectomy. Please watch out for the ureter!’ What is autonomous surgery and what are the latest developments? J Turk Ger Gynecol Assoc. 22:58–70. 2021.

12. Han J, Davids J, Ashrafian H, Darzi A, Elson DS and Sodergren M: A systematic review of robotic surgery: From supervised paradigms to fully autonomous robotic approaches. Int J Med Robot. 18(e2358)2022.

13. Mascagni P, Vardazaryan A, Alapatt D, Urade T, Emre T, Fiorillo C, Pessaux P, Mutter D, Marescaux J, Costamagna G, et al: Artificial intelligence for surgical safety: Automatic assessment of the critical view of safety in laparoscopic cholecystectomy using deep learning. Ann Surg. 275:955–961. 2022.

14. Padovan E, Marullo G, Tanzi L, Piazzolla P, Moos S, Porpiglia F and Vezzetti E: A deep learning framework for real-time 3D model registration in robot-assisted laparoscopic surgery. Int J Med Robot. 18(e2387)2022.

15. Koo B, Robu MR, Allam M, Pfeiffer M, Thompson S, Gurusamy K, Davidson B, Speidel S, Hawkes D, Stoyanov D and Clarkson MJ: Automatic, global registration in laparoscopic liver surgery. Int J Comput Assist Radiol Surg. 17:167–176. 2022.

16. Namazi B, Sankaranarayanan G and Devarajan V: A contextual detector of surgical tools in laparoscopic videos using deep learning. Surg Endosc. 36:679–688. 2022.

17. Yamazaki Y, Kanaji S, Kudo T, Takiguchi G, Urakawa N, Hasegawa H, Yamamoto M, Matsuda Y, Yamashita K, Matsuda T, et al: Quantitative comparison of surgical device usage in laparoscopic gastrectomy between surgeons' skill levels: An automated analysis using a neural network. J Gastrointest Surg. 26:1006–1014. 2022.

18. Aspart F, Bolmgren JL, Lavanchy JL, Beldi G, Woods MS, Padoy N and Hosgor E: ClipAssistNet: Bringing real-time safety feedback to operating rooms. Int J Comput Assist Radiol Surg. 17:5–13. 2022.

19. Cheng K, You J, Wu S, Chen Z, Zhou Z, Guan J, Peng B and Wang X: Artificial intelligence-based automated laparoscopic cholecystectomy surgical phase recognition and analysis. Surg Endosc. 36:3160–3168. 2022.

20. Kitaguchi D, Takeshita N, Matsuzaki H, Hasegawa H, Igaki T, Oda T and Ito M: Deep learning-based automatic surgical step recognition in intraoperative videos for transanal total mesorectal excision. Surg Endosc. 36:1143–1151. 2022.

21. Kitaguchi D, Takeshita N, Matsuzaki H, Takano H, Owada Y, Enomoto T, Oda T, Miura H, Yamanashi T, Watanabe M, et al: Real-time automatic surgical phase recognition in laparoscopic sigmoidectomy using the convolutional neural network-based deep learning approach. Surg Endosc. 34:4924–4931. 2020.

22. Hernández A, Robles de Zulueta P, Spagnolo E, Soguero C, Cristobal I, Pascual I, López A and Ramiro-Cortijo D: Deep learning to measure the intensity of indocyanine green in endometriosis surgeries with intestinal resection. J Pers Med. 12(982)2022.

23. Twinanda AP, Yengera G, Mutter D, Marescaux J and Padoy N: RSDNet: Learning to predict remaining surgery duration from laparoscopic videos without manual annotations. IEEE Trans Med Imaging. 38:1069–1078. 2019.

24. Bodenstedt S, Wagner M, Mündermann L, Kenngott H, Müller-Stich B, Breucha M, Torge Mees S, Weitz J and Speidel S: Prediction of laparoscopic procedure duration using unlabeled, multimodal sensor data. Int J Comput Assist Radiol Surg. 14:1089–1095. 2019.

25. Igaki T, Kitaguchi D, Kojima S, Hasegawa H, Takeshita N, Mori K, Kinugasa Y and Ito M: Artificial intelligence-based total mesorectal excision plane navigation in laparoscopic colorectal surgery. Dis Colon Rectum. 65:e329–e333. 2022.

26. Kumazu Y, Kobayashi N, Kitamura N, Rayan E, Neculoiu P, Misumi T, Hojo Y, Nakamura T, Kumamoto T, Kurahashi Y, et al: Automated segmentation by deep learning of loose connective tissue fibers to define safe dissection planes in robot-assisted gastrectomy. Sci Rep. 11(21198)2021.

27. Moglia A, Morelli L, D'Ischia R, Fatucchi LM, Pucci V, Berchiolli R, Ferrari M and Cuschieri A: Ensemble deep learning for the prediction of proficiency at a virtual simulator for robot-assisted surgery. Surg Endosc. 36:6473–6479. 2022.

28. Zheng Y, Leonard G, Zeh H and Fey AM: Frame-wise detection of surgeon stress levels during laparoscopic training using kinematic data. Int J Comput Assist Radiol Surg. 17:785–794. 2022.

29. Yang GZ, Cambias J, Cleary K, Daimler E, Drake J, Dupont PE, Hata N, Kazanzides P, Martel S, Patel RV, et al: Medical robotics-Regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci Robot. 2(eaam8638)2017.

30. Nagy TD and Haidegger T: Performance and capability assessment in surgical subtask automation. Sensors (Basel). 22(2501)2022.

31. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A and Kim PCW: Supervised autonomous robotic soft tissue surgery. Sci Transl Med. 8(337ra64)2016.

32. Saeidi H, Ge J, Kam M, Opfermann JD, Leonard S, Joshi AS and Krieger A: Supervised autonomous electrosurgery via biocompatible near-infrared tissue tracking techniques. IEEE Trans Med Robot Bionics. 1:228–236. 2019.

33. Saeidi H, Le HND, Opfermann JD, Leonard S, Kim A, Hsieh MH, Kang JU and Krieger A: Autonomous laparoscopic robotic suturing with a novel actuated suturing tool and 3D endoscope. IEEE Int Conf Robot Autom. 2019:1541–1547. 2019.

34. Kam M, Saeidi H, Wei W, Opfermann JD, Leonard S, Hsieh MH, Kang JU and Krieger A: Semi-autonomous robotic anastomoses of vaginal cuffs using marker enhanced 3D imaging and path planning. Med Image Comput Comput Assist Interv. 11768:65–73. 2019.

35. Saeidi H, Opfermann JD, Kam M, Raghunathan S, Leonard S and Krieger A: A confidence-based shared control strategy for the Smart Tissue Autonomous Robot (STAR). Rep US. 2018:1268–1275. 2018.

36. Saeidi H, Opfermann JD, Kam M, Wei W, Leonard S, Hsieh MH, Kang JU and Krieger A: Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci Robot. 7(eabj2908)2022.

37. Hannaford B, Rosen J, Friedman DW, King H, Roan P, Cheng L, Glozman D, Ma J, Kosari SN and White L: Raven-II: An open platform for surgical robotics research. IEEE Trans Biomed Eng. 60:954–959. 2013.

38. Hu D, Gong Y, Seibel EJ, Sekhar LN and Hannaford B: Semi-autonomous image-guided brain tumour resection using an integrated robotic system: A bench-top study. Int J Med Robot. 14(e1872)2018.

39. Gao Q, Tan N and Sun Z: A hybrid learning-based hysteresis compensation strategy for surgical robots. Int J Med Robot. 17(e2275)2021.