Introduction

Coronavirus disease 2019 (COVID-19) is an infectious

disease caused by a novel coronavirus that was first observed in

Wuhan, China in December 2019. On the 11th of March 2020, the World

Health Organization (WHO) declared the outbreak a pandemic. As

of the 8th of July 2020, more than 11.5 million people have been

confirmed to have contracted the virus and more than half a million

have died due to complications of the disease (1). The clinical symptoms of COVID-19 are

non-specific and in most of the cases include fever, cough, fatigue

and dyspnea (2). Obesity (3), chronic cardiovascular diseases

(4) and smoking habits (5) have also been reported to contribute to

the deterioration of the disease. Toxic stressors in urban

environments could have played a role in deteriorating the immune

system of the local population forming the basis for the spreading

of COVID-19 disease (6). In a

similar context, Tsatsakis et al (7) explored the association among

human-induced pollutants found in greenhouse gases, the effect on

the immune system and other environmental aspects related to the

COVID-19 pandemic. Early diagnosis is important not only for prompt

treatment planning but also for isolation of patients in order to

prevent spreading the virus to the community. Currently, reverse

transcription-polymerase chain reaction (RT-PCR) represents the

gold-standard for diagnosing COVID-19. However, testing with RT-PCR

shows limited sensitivity (SN) which, together with the shortage of

testing kits and the increased waiting time for results, increases

the screening burden and delays the isolation procedure (8,9). Thus,

the scientific community has been searching for alternative protocols

for timely and accurate diagnosis. X-ray and chest computed

tomography (CT) imaging could be used as a reliable and rapid

approach for COVID-19 screening (9,10).

Both methods have the potential to depict COVID-19 related chest

abnormalities, with ground-glass lung opacification and

consolidation showing bilateral and subpleural or diffuse

distribution representing the cardinal findings (9,11-16).

Although image acquisition is easy and fast, interpretation

can be challenging and time-consuming, especially for inexperienced

and subspecialized medical professionals. In order to eliminate

such drawbacks, the scientific community has been shifting its

focus towards developing automated tools for the analysis of

imaging data. In this context, several artificial intelligence (AI)

methods have been developed to provide a prediction for the disease

and preliminary severity assessment. Tsiknakis et al

(17) proposed an Interpretable

Convolutional Neural Network (CNN) based on transfer learning for

predicting COVID-19 against viral and bacterial pneumonia and

normal cases based on more than 400 X-ray images, achieving an area

under the curve (AUC) of 100%. Apostolopoulos and Mpesiana (18) also developed a CNN for predicting

COVID-19 from almost 1,500 X-ray images, achieving an accuracy

(ACC) of 96.78%, SN of 98.66% and specificity (SPC) of 96.46%.

However, compared to X-ray images, CT scans provide a more detailed

overview of the internal structure of lung parenchyma due to the

lack of overlapping tissues. Thus, recent research has focused on

the development of effective AI methods based on CT scans. Li et

al (19) developed a 3D CNN for

predicting COVID-19 against community-acquired pneumonia (CAP) from

3D CT scans. Their model was trained on a fairly large dataset of

4,356 CT scans from 3,322 patients, 1,296 of which referred to

COVID-19 positive patients, and achieved SN of 90%, SPC of 96% and

an AUC equal to 95% regarding the COVID-19 class. Their proposed

model consists of several identical ResNet50 models, one for each

CT image. The feature maps of all the backbone models were combined

through a max pooling layer, which was followed by a dense layer

for the final ternary classification. The main downside of such an

approach is that only one ground truth label per exam is available,

and not one for each slice of the CT scan. Optimally, to achieve

finer and more detailed network performance, each CT slice should be

graded individually. In this way, the model would benefit from the

inter-slice relations of the 3D input, as well as from the

extended ground truth information. Wang et al (20) proposed a CNN model which was first

trained on CT scans with cancer from 4,106 patients in order to

learn lung features. Subsequently, they fine-tuned the model on a

COVID-19 multi-centric CT dataset (709 patients). Their model was

validated on 4 independent datasets from different clinical

centers. Their approach performed well in identifying COVID-19

against bacterial or other viral pneumonia cases, achieving an AUC

of 87%, SN of 80.39% and SPC of 76.61% against bacterial pneumonia

and AUC of 86%, SN of 79.35% and SPC of 71.43% against viral

pneumonia. In addition, they stratified the patients into high and

low risk groups, in order to propose a hospital-stay time schedule.

Zhang et al (21) utilized

another network to segment the lung regions, before applying the

classification network in order to discard the background and

irrelevant regions within the CT scan. Their proposed pipeline

achieved an ACC of 91.2%, SN of 94.03%, SPC of 88.46% and an AUC of

96.1% for predicting COVID-19 on a prospective Chinese dataset,

while similar results were achieved on two other prospective

Chinese datasets. Subsequently, the model was applied on a dataset

from Ecuador, achieving an ACC of 84.11%, SN of 86.67%, SPC of 82.26% and AUC of

90.5%. In addition, they evaluated the effect of drug treatment on

lesion size and volume changes using their AI model. Finally, they

compared their model to 8 junior and 4 mid-senior radiologists, for

predicting COVID-19 on an independent dataset which was annotated

by 4 independent senior radiologists. The model outperformed the

junior radiologists, while its performance was comparable to that

of the mid-senior ones. Ardakani et al (22) utilized 10 pre-trained convolutional

networks as the backbone of their classifier, achieving the best

AUC score of 99.4%, SN of 100%, SPC of 99% and ACC of 99.5%, for

distinguishing COVID-19 pneumonia from other types of pneumonia

(viral and bacterial). At the same time, the performance of a

radiologist was moderate, achieving much lower results, i.e., AUC

of 87.3%, SN of 89%, SPC of 83% and ACC of 86%. However, their

dataset included a limited amount of data, namely 1,020 CT slices

for 108 COVID-19 positive patients and 86 pneumonia positive

patients. Additionally, numerous scientific preprints

have been made available in open repositories, claiming high accuracy, SN

and SPC scores (23-29),

for predicting COVID-19 against other pneumonia types or healthy

patients based on deep learning models.

In this study, we propose an extensive pipeline for

automatic COVID-19 screening against other types of pneumonia

(i.e., viral and bacterial) from CT scans, utilizing lung

segmentation for increasing the accuracy. The key innovations of

this study are the integration of

multi-institutional and open-access data from a variety of scanners

and imaging protocols retrieved from online repositories in formats

such as DICOM or Portable Network Graphics (PNG), the development

of a deep learning lung segmentation model for multiple CT window

settings and finally a deep learning model for differentiating

COVID-19 from CAP. This study introduces a state-of-the-art deep

learning model for lung segmentation on slices with a variety of CT

window settings (Dice Similarity Coefficient [DSC] 99.6%) and an image analysis deep model

trained with multi-institutional data for differentiating COVID-19

from CAP (AUC 96.1%).

Materials and methods

Dataset

The proposed analysis was performed using openly available

online data of confirmed COVID-19 cases. In

particular, the patient cohort consisted of 3 datasets (30-32)

with 1,266 COVID-19 and 586 pneumonia cases stratified on a

patient-basis resulting in 5,109 CT slices. Slices

labeled as anything other than COVID-19 or pneumonia, such as normal, were

discarded. The curated individual slices were collected from

patients in hospitals from São Paulo in Brazil (30) and open repositories such as medRxiv

(https://www.medrxiv.org/) and bioRxiv (https://www.biorxiv.org/) (31). Additionally, these datasets were

made available as standalone 8-bit image files with unknown

compression methodology, no metadata and

various CT window settings, rendering segmentation and

classification tasks with traditional methods challenging. In

contrast, the third dataset (32)

consisted of 20 cases with COVID-19 pixel-based annotated regions

of interest in meta-image form (NIfTI files) from the open

repositories of Radiopedia (http://radiopedia.org) and Coronacases (http://coronacases.org). This multi-institutional

collection of CT examinations led to high variability in spacing,

pixel array size and windowing type across the examined cases.
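The effect of differing CT window settings on 8-bit image files can be illustrated with a minimal sketch. The function name and the two window presets below are assumptions chosen for illustration and are not taken from the datasets; the sketch only shows how the same Hounsfield Unit (HU) values map to different grey levels depending on the window.

```python
def apply_ct_window(hu_values, level, width):
    """Map Hounsfield Units to 8-bit grey levels for a given window.

    Values below (level - width/2) become 0 and values above
    (level + width/2) become 255; everything in between is scaled linearly.
    """
    lower = level - width / 2.0
    upper = level + width / 2.0
    out = []
    for hu in hu_values:
        clipped = min(max(hu, lower), upper)
        out.append(round(255 * (clipped - lower) / (upper - lower)))
    return out


# Illustrative presets (assumed values, not reported in the study).
LUNG_WINDOW = {"level": -600, "width": 1500}
MEDIASTINAL_WINDOW = {"level": 40, "width": 400}

# Roughly: air, aerated lung, water, soft tissue.
sample_hu = [-1000, -700, 0, 60]

lung = apply_ct_window(sample_hu, **LUNG_WINDOW)
mediastinal = apply_ct_window(sample_hu, **MEDIASTINAL_WINDOW)
```

Under the assumed mediastinal preset, both air and aerated lung are clipped to the same grey level, whereas the lung preset keeps them apart. Once a slice has been exported as an 8-bit image without metadata, this information cannot be recovered, which is one reason fixed-threshold methods struggle on such data.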

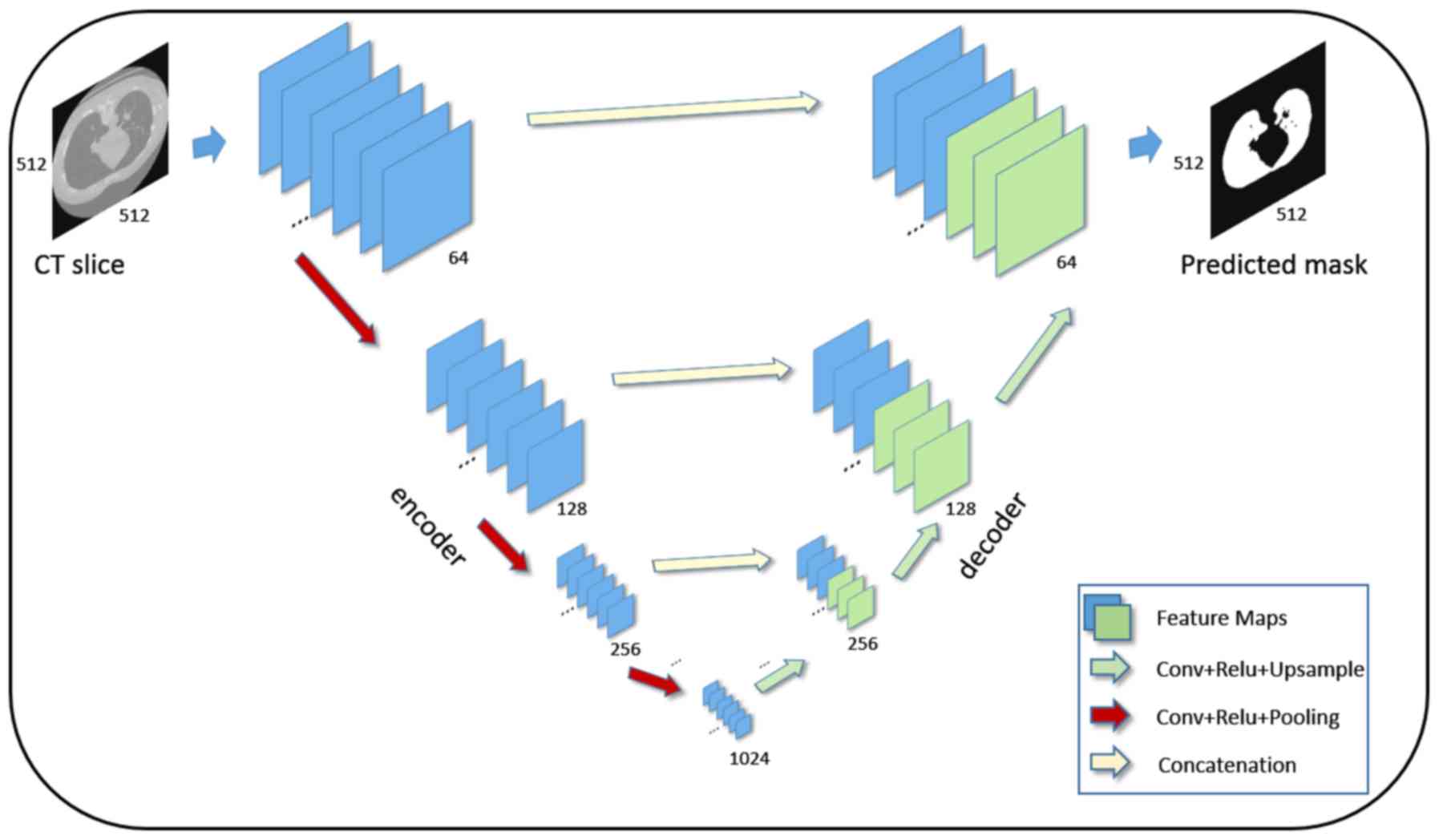

Lung segmentation

U-Net (33) is a

fully-convolutional architecture comprising multiple consecutive

convolutional and pooling layers in the encoder part and

convolutional and upsampling layers in the decoder part. The deep

network can accurately match incoming CT examination slices to

their corresponding segmentation mask. This process is learned from

data with known segmentation masks through the back-propagation of

the similarity error during the training phase. The Lung Image

Database Consortium-Image Database Resource Initiative (LIDC-IDRI)

(34) dataset was used for training

and evaluating the deep learning segmentation model. The ground

truth masks for lung segmentation were extracted by a

fully-automated Hounsfield Units (HU) based algorithm (35). A subset of the 1,018 scans with

98,433 CT slices was used for model convergence. A detailed view of

the architecture is depicted in Fig. 1.

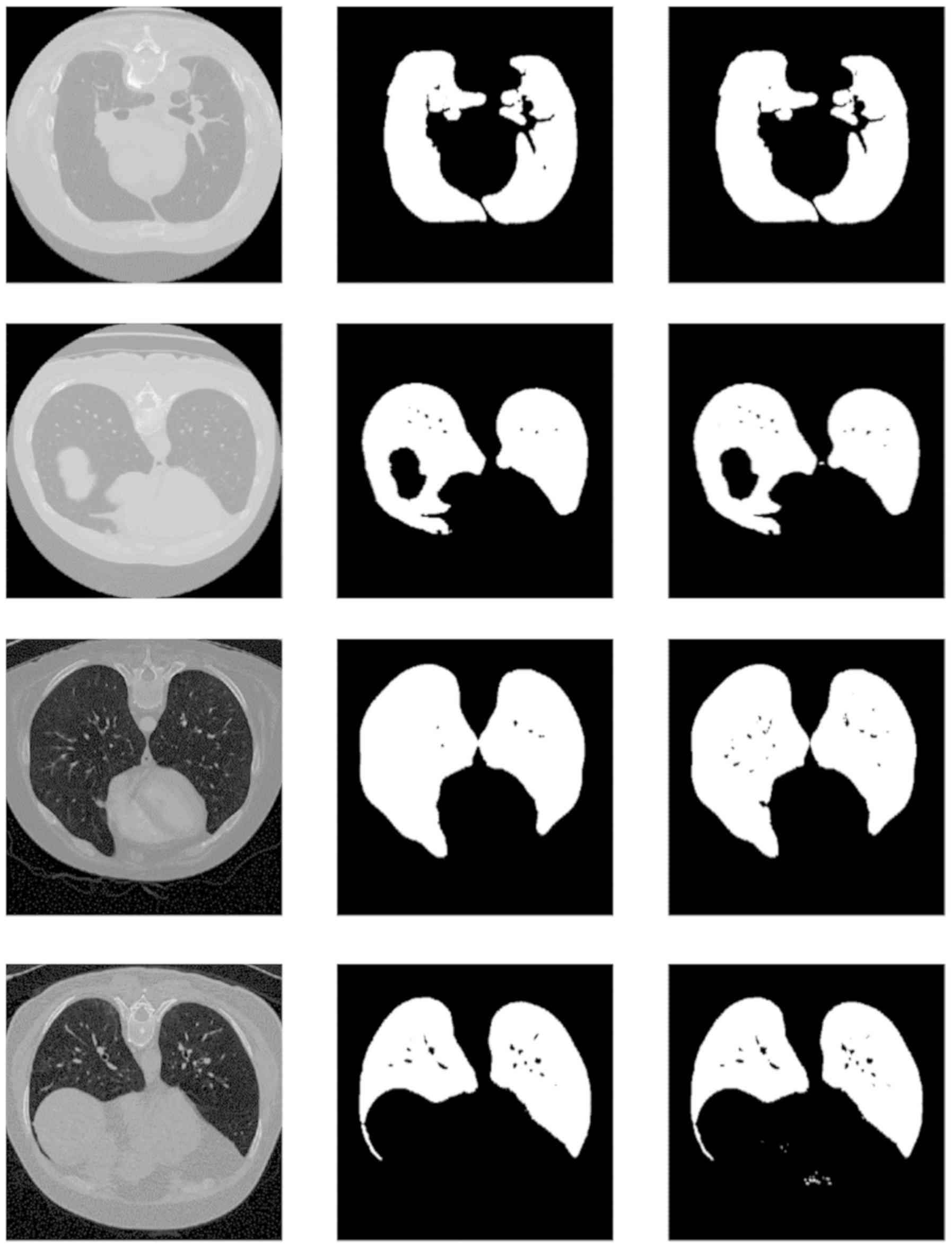

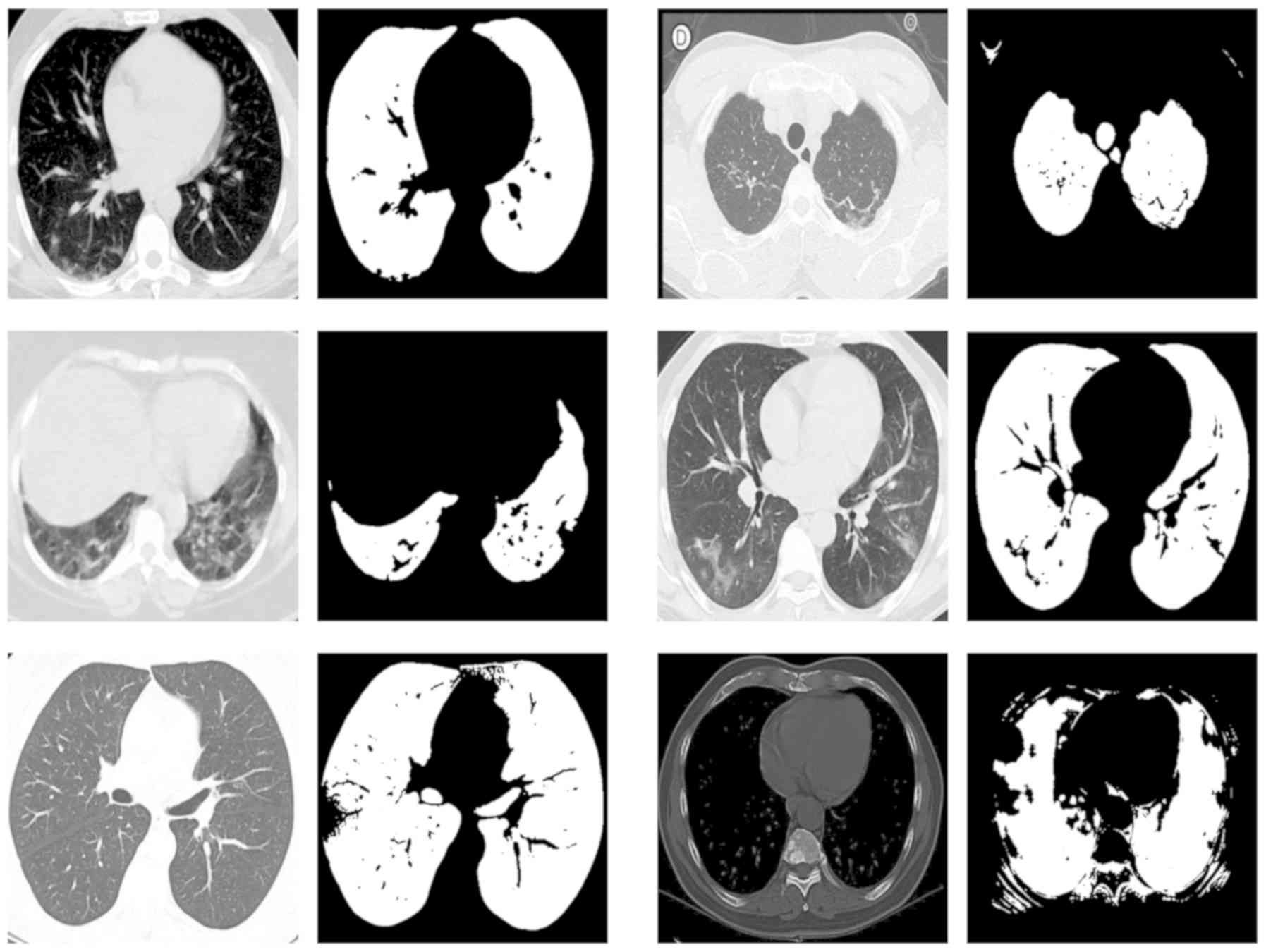
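The overlap measure used to report segmentation quality, the Dice Similarity Coefficient, can be sketched as follows. This is a minimal illustration on flattened binary masks. The text does not state which similarity error was back-propagated during training, so the `dice_loss` helper is an assumption showing one common choice, not the authors' confirmed loss function.

```python
def dice_coefficient(pred_mask, true_mask, eps=1e-7):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for flat binary masks.

    eps avoids division by zero when both masks are empty.
    """
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    return (2.0 * intersection + eps) / (total + eps)


def dice_loss(pred_mask, true_mask):
    """Assumed training objective: 1 - Dice (lower is better)."""
    return 1.0 - dice_coefficient(pred_mask, true_mask)


# Toy example: 3 lung pixels in each mask, 2 of them overlapping.
truth = [0, 1, 1, 1, 0, 0]
pred = [0, 1, 1, 0, 0, 1]
score = dice_coefficient(pred, truth)
```

In this toy case the score is 2·2/(3+3), approximately 0.667. A perfect prediction gives a score of 1 and a loss of 0.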

Pre-processing

The image resolution of the examined patient cohort

varied from 148x61 to 1,637x1,225 pixels introducing a significant

limitation for the deep learning analysis. Each slice was resized

with a linear interpolation technique targeting 512x512 pixels to

match the required input dimensions of the U-Net

segmentation network. The slices of the examined cohort were

segmented with the custom U-Net and normalized to achieve pixel

values with unit variance and zero mean prior to the deep learning

analysis. Fig. 2 presents the testing set

ground truth masks versus the predicted masks, revealing a

high-performing segmentation model, and Fig. 3 shows a randomly selected set of predicted

segmentation masks from the COVID-19 dataset.

Despite the aforementioned variability in pixel array dimensions

and CT window settings, the proposed methodology achieves minimal

segmentation error.
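The pre-processing steps described above (linear resizing, lung masking and standardization) can be sketched in dependency-free Python. All function names are hypothetical, the toy mask stands in for the U-Net output, and a real pipeline would typically rely on an image library rather than nested lists; this is an illustration under those assumptions, not the published implementation.

```python
import math

TARGET_SIZE = 512  # U-Net input size stated in the text


def resize_bilinear(image, out_h, out_w):
    """Resize a 2D list of pixel values with bilinear (linear) interpolation."""
    in_h, in_w = len(image), len(image[0])
    out = []
    for i in range(out_h):
        # Fractional source row corresponding to output row i.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = math.floor(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = math.floor(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = image[y0][x0] * (1 - dx) + image[y0][x1] * dx
            bottom = image[y1][x0] * (1 - dx) + image[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def apply_mask(image, mask):
    """Zero out every pixel outside the binary lung mask."""
    return [[v * m for v, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def standardize(image, eps=1e-8):
    """Shift and scale pixel values to zero mean and unit variance."""
    pixels = [v for row in image for v in row]
    mean = sum(pixels) / len(pixels)
    std = math.sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))
    return [[(v - mean) / (std + eps) for v in row] for row in image]


# Toy 2x2 "slice" upsampled to 3x3 so the result is easy to inspect; a real
# slice would use resize_bilinear(slice_2d, TARGET_SIZE, TARGET_SIZE).
toy = [[0.0, 10.0], [20.0, 30.0]]
resized = resize_bilinear(toy, 3, 3)
toy_mask = [[0, 1, 1], [0, 1, 1], [0, 1, 1]]  # hypothetical segmentation output
lung_only = apply_mask(resized, toy_mask)
normalized = standardize(lung_only)
```

Resizing before segmentation keeps the mask and the slice on the same pixel grid, and standardizing after masking means the statistics are computed on the image that is actually passed to the classifier.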

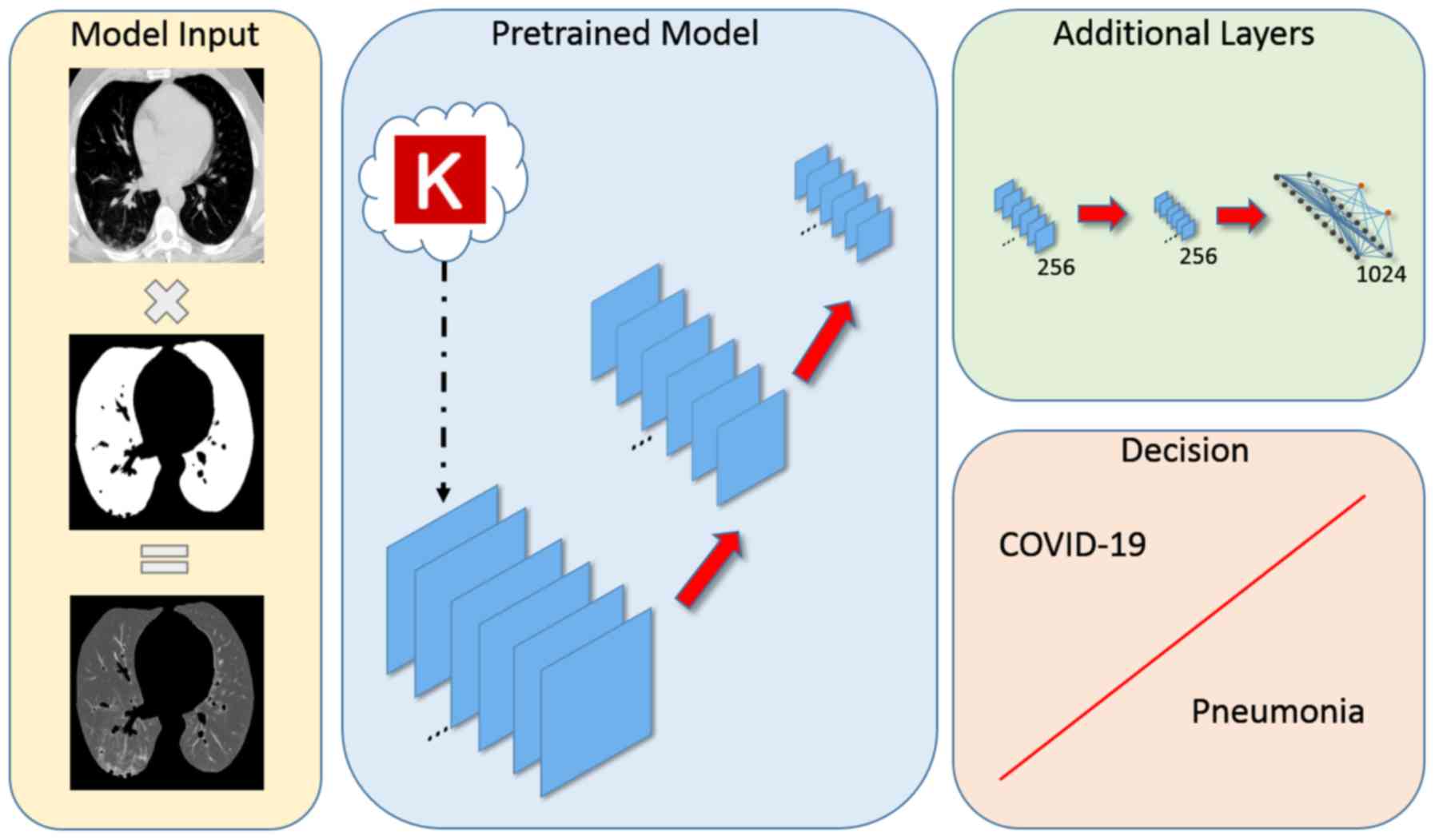

Deep architecture analysis

A transfer learning approach was used for improving

the convergence and fine-tuning process based on CT slices of the

examined cohort by adapting the inner representation of the

pre-trained model to the targeted COVID-19 versus CAP analysis. Two

additional convolutional layers with 256 filters each and a neural

network with 1,000 neurons were appended to the original

pre-trained model, as depicted in Fig. 4. The source code and the detailed hyperparameter

configuration files are provided online (https://github.com/trivizakis/ct-covid-analysis).

Performance evaluation

Performance was assessed using the following metrics:

ACC, SN, SPC and precision (PR).
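The defining equations are not reproduced in this text. As a minimal sketch, the standard confusion-matrix definitions of these four metrics are shown below, with TP, FP, TN and FN denoting true positives, false positives, true negatives and false negatives. The function name and the example counts are illustrative assumptions and are not results from the study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    return {
        "ACC": (tp + tn) / (tp + fp + tn + fn),  # accuracy
        "SN": tp / (tp + fn),                    # sensitivity (recall)
        "SPC": tn / (tn + fp),                   # specificity
        "PR": tp / (tp + fp),                    # precision
    }


# Made-up counts for illustration only.
metrics = classification_metrics(tp=90, fp=10, tn=40, fn=10)
```

With these counts, SN is 90/100, SPC is 40/50, PR is 90/100 and ACC is 130/150.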

Results

A 4-fold cross-validation process was performed for

splitting the dataset into the convergence and the testing sets. The

convergence set was further randomly split into the training (80%)

and validation set (20%) for applying the fine-tuning and

hyperparameter optimization process respectively. The testing folds

remained unseen throughout the analysis to assess the performance

of the proposed deep learning model. A custom U-Net for lung

parenchyma segmentation was trained and evaluated on a total set of

109,370 LIDC-IDRI CT slices with ground truth segmentation masks

calculated on a HU basis by an automated algorithm. The U-Net

converged after 5 epochs of training and achieved a performance of

Dice Similarity Coefficient of 99.55% in the testing set providing

a reliable and accurate segmentation model as evident by the

randomly selected examples presented in Fig. 3. Although perfect segmentation

masks cannot be obtained for the analysis dataset due to the

variability in image quality, the majority of pathological pixels

are present in the final segmented image despite some false

negative pixels. Several pre-trained models were examined including

VGG (36), Inception (37), NASNet (38), DenseNet (39) and MobileNet (40) with additional convolutional and

neural network layers as depicted in Fig. 4. The analysis architecture was

trained on average for 16 epochs before early-stopping. Most of

pre-trained models preformed comparably with an AUC up to 93% and

the highest performance (AUC 96.1%) was achieved with VGG-19 as the

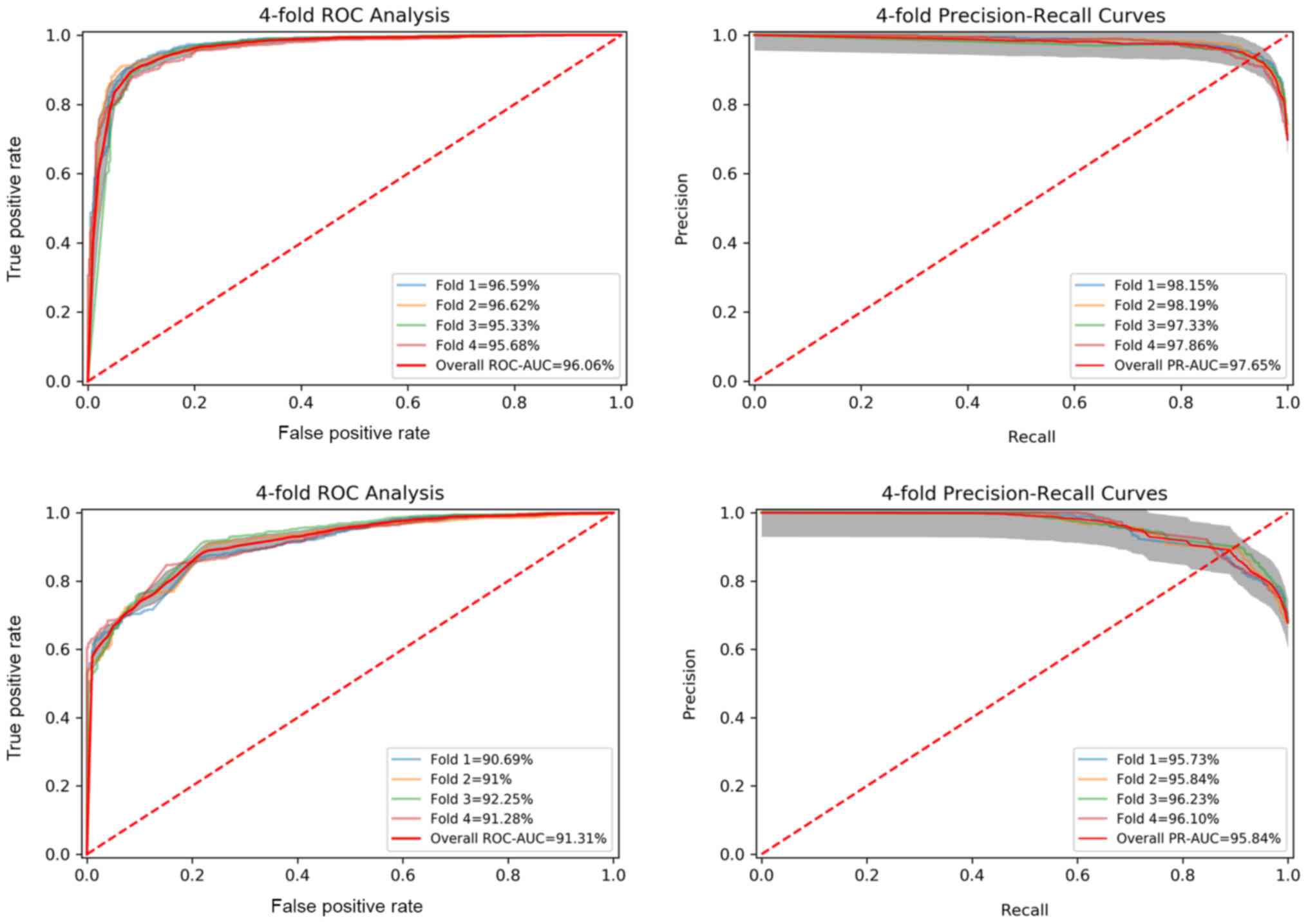

backend pre-trained network. The receiver operating characteristic

(ROC) and PR-recall curves of the best model are presented in

Fig. 5 (top) outlining the

robustness and constancy of the examined analysis. The proposed

deep learning architecture with a robust pre-processing protocol

including lung segmentation and image standardization outperforms

the baseline model (up-to AUC 91.3%) with similar architecture but

with unsegmented CT slices and the current literature (Table I) with similar experimental

protocols.
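The data-splitting protocol described in this section (4 folds, with each convergence set further divided 80/20 into training and validation) can be sketched as follows. Splitting by patient identifier is an assumption consistent with the patient-basis stratification mentioned in the dataset description. The function name, the seed and the 100 dummy identifiers are hypothetical, and class stratification is deliberately left out to keep the sketch short.

```python
import random


def patient_level_folds(patient_ids, n_folds=4, val_fraction=0.2, seed=0):
    """Yield train/val/test patient lists for each cross-validation fold."""
    rng = random.Random(seed)
    ids = list(patient_ids)
    rng.shuffle(ids)
    # Deal patients round-robin into n_folds disjoint groups.
    folds = [ids[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        test = folds[k]
        convergence = [p for j, fold in enumerate(folds) if j != k for p in fold]
        rng.shuffle(convergence)
        n_val = round(val_fraction * len(convergence))
        yield {
            "train": convergence[n_val:],  # used for fine-tuning
            "val": convergence[:n_val],    # used for hyperparameter optimization
            "test": test,                  # held out, never seen during training
        }


splits = list(patient_level_folds(range(100)))
```

Because whole patients are assigned to a fold, slices from the same patient never appear in both the convergence and the testing set, which avoids optimistic performance estimates caused by near-duplicate slices.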

Table I. Performance of the proposed analysis in comparison to the current peer-reviewed research with similar end-points.

| Method | ACC (%) | SN (%) | SPC (%) | AUC (%) | Patients, no. (training/testing) | CT slices, no. (training/testing) |
|---|---|---|---|---|---|---|
| Proposed with segmentation | 91.1 | 92.0 | 87.5 | 96.1 | COVID-19: 894/372; CAP: 414/172 | COVID-19: 2,047/853; CAP: 976/406 |
| Proposed no lung segmentation | 85.1 | 88.8 | 76.8 | 91.3 | | |
| Li et al (19) | - | 90.0 | 96.0 | 95.0 | COVID-19: 400/68; CAP: 1,396/155; Non-pneumonia: 1,173/130 | COVID-19: 1,165/127; CAP: 1,560/175; Non-pneumonia: 1,193/132 |
| Wang et al (20) | - | 80.4 | 76.6 | 87.0 | All: 709/557 | - |
| Zhang et al (21) | 91.2 | 94.0 | 88.5 | 96.0 | COVID-19: 752; CAP: 797; Non-pneumonia: 697 | All: 444,034 |
| Ardakani et al (22) | 99.5 | 100.0 | 99.0 | 99.4 | COVID-19: 108; Non-COVID-19: 86 | COVID-19: 510; Non-COVID-19: 510; Training/testing (All): 816/102 |
| Harmon et al (42) | 90.8 | 84.0 | 93.0 | 94.8 | COVID-19: 451/276; Non-COVID-19: 533/1,011 | 3D classification model |

Discussion

The proposed analysis integrates deep learning lung

segmentation for removing irrelevant organs/landmarks and transfer

learning for discriminating between COVID-19 and other types of

pneumonia from CT imaging data with multiple window settings. The

segmentation model was trained on openly available CT data

(LIDC-IDRI) providing state-of-the-art performance even in datasets

such as the examined COVID-19 (Fig.

3) from various institutes with different CT scanners, no DICOM

metadata available and variability in image quality. Thus, the

transfer learning-based analysis was focused only on the lung

region resulting in higher performance (AUC 96.1%) significantly

advancing the baseline of 91.3% without segmented CT slices of the

examined dataset. A limitation is that, during inference, the suspicious or

disputable CT slices analyzed by the deep model must first

be identified by an expert radiologist from the raw CT

volume, so a fully automated

diagnosis is not possible. That said, as presented in Table I, the proposed methodology

outperforms the current literature (19-21)

in terms of AUC performance. In particular, the deep model proposed

by Zhang et al (21)

demonstrates similar performance to the present analysis but the

examined CT slices were manually segmented on lesion basis before

classification (COVID-19 versus common pneumonia versus normal

patients). Ardakani et al (22) report a model with an AUC

of 99.4%; however, it uses a different experimental protocol

from the one examined herein, in which patches (60 by 60 pixels) were

extracted by an experienced radiologist for the analysis, requiring

additional and time-consuming labor from a clinical practice

perspective.

In conclusion, the present study addresses

limitations of other efforts (22,27,41)

where the analysis was applied on raw CT data with a more demanding

preprocessing phase and uniform data quality. This is further

highlighted by the increased performance stability demonstrated by

the low prediction variability among deep models of each fold in

both ROC (standard deviation 0.55%) and PR (standard deviation

0.34%) curves as depicted in Fig. 5

(top).

Acknowledgements

Not applicable.

Funding

Part of this study was financially supported by the

Stavros Niarchos Foundation within the framework of the project

ARCHERS (‘Advancing Young Researchers' Human Capital in Cutting

Edge Technologies in the Preservation of Cultural Heritage and the

Tackling of Societal Challenges’).

Availability of data and materials

Not applicable.

Authors' contributions

ET, NT and KM conceived and designed the study. ET,

NT and KM researched the literature, performed analysis and

interpretation of data and drafted the manuscript. EEV, GZP, AHK,

NP, DAS, DS and AT critically revised the article for important

intellectual content and assisted in the literature search for this

article. All authors agree to be accountable for all aspects of the

work in ensuring that questions related to the accuracy or

integrity of any part of the work are appropriately investigated,

and finally approved the version of the manuscript to be

published.

Ethics approval and consent to participate

Not applicable.

Patient consent for publication

Not applicable.

Competing interests

DAS is the Editor-in-Chief for the journal, but had

no personal involvement in the reviewing process, or any influence

in terms of adjudicating on the final decision, for this article.

All the other authors declare that they have no competing

interests.

References

1. World Health Organization (WHO): WHO Coronavirus Disease (COVID-19) Dashboard. WHO, Geneva, 2020. https://covid19.who.int/. Accessed August 1, 2020.

2. World Health Organization (WHO): Report of the WHO-China Joint Mission on Coronavirus Disease 2019 (COVID-19). WHO, Geneva, 2020. https://www.who.int/docs/default-source/coronaviruse/who-china-joint-mission-on-covid-19-final-report.pdf. Accessed February 28, 2020.

3. Petrakis D, Margină D, Tsarouhas K, Tekos F, Stan M, Nikitovic D, Kouretas D, Spandidos DA and Tsatsakis A: Obesity a risk factor for increased COVID-19 prevalence, severity and lethality (Review). Mol Med Rep. 22:9–19. 2020.

4. Docea AO, Tsatsakis A, Albulescu D, Cristea O, Zlatian O, Vinceti M, Moschos SA, Tsoukalas D, Goumenou M, Drakoulis N, et al: A new threat from an old enemy: Re-emergence of coronavirus (Review). Int J Mol Med. 45:1631–1643. 2020.

5. Farsalinos K, Niaura R, Le Houezec J, Barbouni A, Tsatsakis A, Kouretas D, Vantarakis A and Poulas K: Editorial: Nicotine and SARS-CoV-2: COVID-19 may be a disease of the nicotinic cholinergic system. Toxicol Rep. 7:658–663. 2020.

6. Kostoff RN, Briggs MB, Porter AL, Hernández AF, Abdollahi M, Aschner M and Tsatsakis A: The under-reported role of toxic substance exposures in the COVID-19 pandemic. Food Chem Toxicol: Aug 14, 2020 (Epub ahead of print).

7. Tsatsakis A, Petrakis D, Nikolouzakis TK, Docea AO, Calina D, Vinceti M, Goumenou M, Kostoff RN, Mamoulakis C, Aschner M, et al: COVID-19, an opportunity to reevaluate the correlation between long-term effects of anthropogenic pollutants on viral epidemic/pandemic events and prevalence. Food Chem Toxicol. 141(111418). 2020.

8. Xie X, Zhong Z, Zhao W, Zheng C, Wang F and Liu J: Chest CT for typical coronavirus disease 2019 (COVID-19) pneumonia: Relationship to negative RT-PCR testing. Radiology. 296:E41–E45. 2020.

9. Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, Tao Q, Sun Z and Xia L: Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A Report of 1014 cases. Radiology. 296:E32–E40. 2020.

10. Fang Y, Zhang H, Xie J, Lin M, Ying L, Pang P and Ji W: Sensitivity of chest CT for COVID-19: comparison to RT-PCR. Radiology. 296:E115–E117. 2020.

11. Chen N, Zhou M, Dong X, Qu J, Gong F, Han Y, Qiu Y, Wang J, Liu Y, Wei Y, et al: Epidemiological and clinical characteristics of 99 cases of 2019 novel coronavirus pneumonia in Wuhan, China: A descriptive study. Lancet. 395:507–513. 2020.

12. Holshue ML, DeBolt C, Lindquist S, Lofy KH, Wiesman J, Bruce H, Spitters C, Ericson K, Wilkerson S, Tural A, et al: Washington State 2019-nCoV Case Investigation Team: First case of 2019 novel coronavirus in the United States. N Engl J Med. 382:929–936. 2020.

13. Wang D, Hu B, Hu C, Zhu F, Liu X, Zhang J, Wang B, Xiang H, Cheng Z, Xiong Y, et al: Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus-infected pneumonia in Wuhan, China. JAMA. 323:1061–1069. 2020.

14. Li Q, Guan X, Wu P, Wang X, Zhou L, Tong Y, Ren R, Leung KSM, Lau EHY, Wong JY, et al: Early transmission dynamics in Wuhan, China, of novel coronavirus-infected pneumonia. N Engl J Med. 382:1199–1207. 2020.

15. Chung M, Bernheim A, Mei X, Zhang N, Huang M, Zeng X, Cui J, Xu W, Yang Y, Fayad ZA, et al: CT imaging features of 2019 novel coronavirus (2019-NCoV). Radiology. 295:202–207. 2020.

16. Huang C, Wang Y, Li X, Ren L, Zhao J, Hu Y, Zhang L, Fan G, Xu J, Gu X, et al: Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 395:497–506. 2020.

17. Tsiknakis N, Trivizakis E, Vassalou EE, Papadakis GZ, Spandidos DA, Tsatsakis A, Sánchez-García J, López-González R, Papanikolaou N, Karantanas AH, et al: Interpretable artificial intelligence framework for COVID-19 screening on chest X-rays. Exp Ther Med. 20:727–735. 2020.

18. Apostolopoulos ID and Mpesiana TA: Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 43:635–640. 2020.

19. Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, Bai J, Lu Y, Fang Z, Song Q, et al: Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: Evaluation of the diagnostic accuracy. Radiology. 296:E65–E71. 2020.

20. Wang S, Zha Y, Li W, Wu Q, Li X, Niu M, Wang M, Qiu X, Li H, Yu H, et al: A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. Eur Respir J. 56(2000775). 2020.

21. Zhang K, Liu X, Shen J, Li Z, Sang Y, Wu X, Zha Y, Liang W, Wang C, Wang K, et al: Clinically applicable AI system for accurate diagnosis, quantitative measurements, and prognosis of COVID-19 pneumonia using computed tomography. Cell. 181:1423–1433.e11. 2020.

22. Ardakani AA, Kanafi AR, Acharya UR, Khadem N and Mohammadi A: Application of deep learning technique to manage COVID-19 in routine clinical practice using CT images: Results of 10 convolutional neural networks. Comput Biol Med. 121(103795). 2020.

23. Song Y, Zheng S, Li L, Zhang X, Zhang X, Huang Z, Chen J, Zhao H, Jie Y, Wang R, et al: Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. medRxiv: doi: https://doi.org/10.1101/2020.02.23.20026930.

24. Zheng C, Deng X, Fu Q, Zhou Q, Feng J, Ma H, Liu W and Wang X: Deep learning-based detection for COVID-19 from chest CT using weak label. medRxiv: doi: https://doi.org/10.1101/2020.03.12.20027185.

25. Gozes O, Frid-Adar M, Greenspan H, Browning PD, Zhang H, Ji W, Bernheim A and Siegel E: Rapid AI development cycle for the coronavirus (COVID-19) pandemic: Initial results for automated detection and patient monitoring using deep learning CT image analysis. arXiv:2003.05037.

26. Shan F, Gao Y, Wang J, Shi W, Shi N, Han M, Xue Z, Shen D and Shi Y: Lung infection quantification of COVID-19 in CT images with deep learning. arXiv:2003.04655.

27. Kassani SH, Kassasni PH, Wesolowski MJ, Schneider KA and Deters R: Automatic detection of coronavirus disease (COVID-19) in x-ray and CT images: A machine learning-based approach. arXiv:2004.10641.

28. Hu S, Gao Y, Niu Z, Jiang Y, Li L, Xiao X, Wang M, Fang EF, Menpes-Smith W, Xia J, et al: Weakly supervised deep learning for COVID-19 infection detection and classification from CT images. IEEE Access. 8:118869–118883. 2020.

29. Chen X, Yao L and Zhang Y: Residual attention U-Net for automated multi-class segmentation of COVID-19 chest CT images. arXiv:2004.05645.

30. Soares E, Angelov P, Biaso S, Froes MH and Abe DK: SARS-CoV-2 CT-scan dataset: A large dataset of real patients CT scans for SARS-CoV-2 identification. medRxiv: doi: https://doi.org/10.1101/2020.04.24.20078584.

31. Yang X, He X, Zhao J, Zhang Y, Zhang S and Xie P: COVID-CT-Dataset: A CT scan dataset about COVID-19. arXiv:2003.13865.

32. Ma J, Ge C, Wang Y, An X, Gao J, Yu Z, Zhang M, Liu X, Deng X, Cao S, et al: COVID-19 CT lung and infection segmentation dataset. Zenodo: http://doi.org/10.5281/zenodo.3757476.

33. Ronneberger O, Fischer P and Brox T: U-net: Convolutional networks for biomedical image segmentation. In: Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Vol 9351. Springer Verlag, pp234-241, 2015.

34. Armato III S, McLennan G, Bidaut L, McNitt-Gray M, Meyer C, Reeves A, Zhao B, Aberle D, Henschke C, Clarke L, et al: The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI): a completed reference database of lung nodules on CT scans. Med Phys. 38:915–931. 2011.

35. GitHub: wanwanbeen/ct_lung_segmentation: Robust segmentation of lung and airway in CT scans. https://github.com/wanwanbeen?tab=repositories. Updated November 29, 2017.

36. Simonyan K and Zisserman A: Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556.

37. Szegedy C, Vanhoucke V, Ioffe S, Shlens J and Wojna Z: Rethinking the inception architecture for computer vision. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, pp2818-2826, 2016.

38. Zoph B, Vasudevan V, Shlens J and Le QV: Learning transferable architectures for scalable image recognition. arXiv:1707.07012v4.

39. Huang G, Liu Z, van der Maaten L and Weinberger KQ: Densely connected convolutional networks. arXiv:1608.06993.

40. Sandler M, Howard A, Zhu M, Zhmoginov A and Chen LC: MobileNetV2: Inverted Residuals and Linear Bottlenecks. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp4510-4520, 2018.

41. He X, Yang X, Zhang S, Zhao J, Zhang Y, Xing E and Xie P: Sample-Efficient Deep Learning for COVID-19 Diagnosis Based on CT Scans. medRxiv: doi: https://doi.org/10.1101/2020.04.13.20063941.

42. Harmon SA, Sanford TH, Xu S, Turkbey EB, Roth H, Xu Z, Yang D, Myronenko A, Anderson V, Amalou A, et al: Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun. 11(4080). 2020.