Introduction

Cervical cancer is one of the most common malignant tumors in women worldwide and in China (1), and cervical squamous cell carcinoma accounts for 80% of cases (2). In 2018, there were 106,430 new cases and 47,739 deaths due to cervical cancer in China (3). Morbidity and mortality are increasing at average annual rates of 8.7 and 8.1%, respectively (4). However, diagnosing cervical cancer at the precursor lesion stage could reduce its incidence and improve patients' quality of life.

Effective early screening technology that detects

precursor lesions includes the Pap smear and the ThinPrep Cytologic

Test (TCT). Previous reports showed that the TCT has higher

sensitivity (5), and is one of the

most common cervical cancer screening methods (6). The diagnosis from TCT samples is mainly

dependent on a pathologist's interpretation under a microscope,

according to the Bethesda report system (7). This system defines six categories of

cervical squamous cells: Negative for intraepithelial lesion or

malignancy (NILM), atypical squamous cells of undetermined significance

(ASC-US), atypical squamous cells, cannot exclude high-grade

squamous intraepithelial lesion (ASC-H), low-grade squamous

intraepithelial lesions (LSIL), high-grade squamous intraepithelial

lesions (HSIL), and squamous cell carcinoma (SCC). The first

category, NILM, indicates the presence of normal cells, while the

remaining five categories indicate the presence of abnormal cells.

The degree of morphological abnormality is the key to defining the

lesion grade, including the shape, size, and boundary of the

nucleus and the characteristics of the chromatin. However, accurate

diagnosis of cervical squamous cells by pathologists is

time-consuming and subjective, with the classification of samples

influenced by cellular complexity and the pathologist's previous

experience interpreting TCT samples.

Advances in artificial intelligence (AI) technology,

especially deep convolutional neural networks (DCNN), have

obtained great success in medical research, including skin lesion

classification (8) and acute

lymphoblastic leukemia diagnosis (9). The DCNN often contains several layers

with convolutional kernels, similar to a mathematical model that

contains numerous parameters; thus, a large amount of data is

needed to train kernels. Unlike traditional algorithms (10), DCNN is not limited to features that

are extracted based on expert experience. Instead, it

uses the original images as input, automatically extracts a large

number of hierarchical features, and finally generates a diagnosis

as an output. Many researchers have focused on digital cervical

pathology, where DCNN is used to provide a diagnosis of cervical

cancer (11–16). Abdulkerim et al (11) and Chankong et al (12) segmented the cervical cells for

diagnosis. Zhang et al (13)

and Wu et al (14) classified

the cervical lesion grades in single cell images. Tian et al

(15) and Liu et al (16) simultaneously detected a lesion

location and classified the lesion degree in whole pathological

images without prior cell segmentation or cropping.

Although previous studies have advanced the use of

DCNN in cervical cancer screening, the studies face three major

limitations. First, those studies usually focused on Pap smear

images (11–14). This early screening method has been

largely replaced by the TCT, but researchers have not yet

examined the use of DCNN to diagnose cervical cancer from TCT

images. Second, to improve and evaluate the comprehensiveness and

robustness of DCNN models, multi-center datasets, which include

data from different levels of hospitals, are needed. However,

previous studies have only included data from one center (14–15).

Third, in order to analyze the results of the AI diagnoses,

researchers should compare the results with those from the manual

interpretation completed by pathologists. However, few studies have

generated such comparisons (17).

The aim of the present study was to address the

limitations of previous studies that have examined the utility of

AI in cervical cancer detection by building a TCT dataset from

samples collected from different hospitals and creating an

automated pipeline for the normal and abnormal cervical squamous

cell classification using VGG16, which is a popular DCNN model. The

results were compared with the classifications provided by two

different pathologists.

Materials and methods

TCT image collection

TCT specimens were obtained from a total of 82

patients in four hospitals located in the city of Chongqing, China

(Table I).

Table I. Characteristics of the TCT specimens used to form the dataset.

| Variables | Daping Hospital (n=38) | Armed Police General Hospital (n=10) | Hi-Tech People's Hospital (n=16) | Du Deling Clinic (n=18) |
|---|---|---|---|---|
| Age (years) | 43 (20–66) | 48 (36–62) | 42 (25–57) | 54 (34–56) |
| Cells | 1,184 | 776 | 1,115 | 215 |
| Training | 977 | 625 | 889 | 178 |
| Testing | 207 | 151 | 226 | 37 |

The inclusion criteria in this study were: i) Patients aged ≥18 years; ii) satisfactory TCT specimens that contained ≥2,000 squamous epithelial cells with clear morphologies; and iii) a TCT diagnosis of one of the precursor lesion subtypes or cervical cancer. This study was approved by the Ethics Committee of the Army Military Medical University (approval no. KY201774) and was conducted in accordance with the Helsinki Declaration. The study was explained to all the patients, and oral informed consent for the use of their samples in scientific research was obtained by phone when the images were collected. Written patient consent was not required, according to the guidance of the ethics committee.

A total of 1,736 abnormal and 1,554 normal cell samples were obtained, which were randomly divided into training (n=2,669) and test (n=621) datasets at a ratio of approximately 4:1. All images were in the BMP file format.

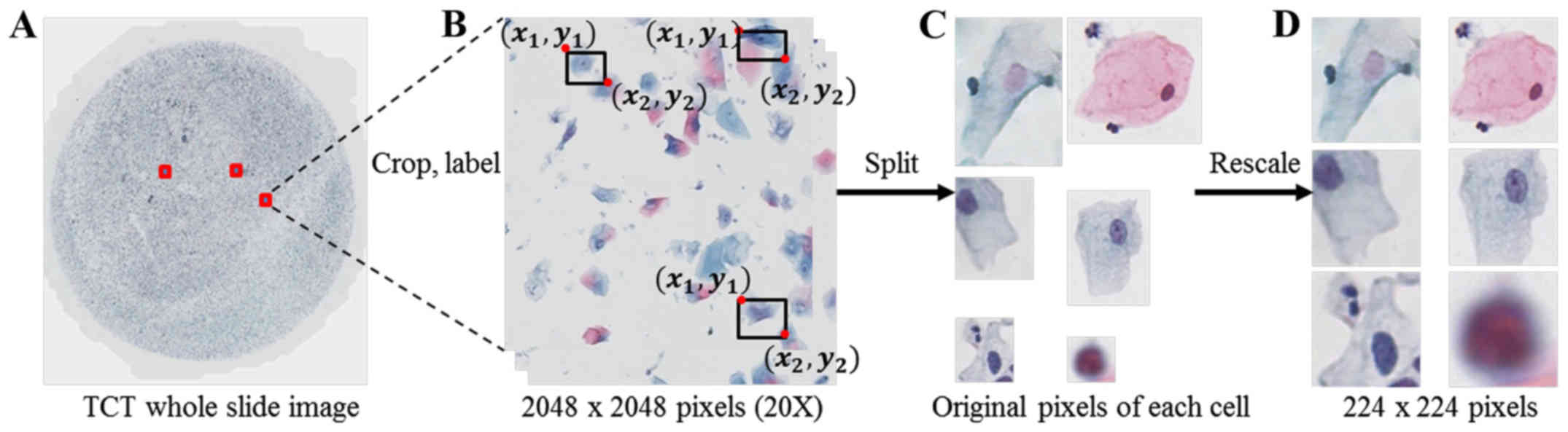

Data preprocessing

TCT specimens were digitized using a microscope (model VS120; Olympus) with a 40× objective lens, and the images were cut into 2048×2048-pixel tiles for labeling (Fig. 1). Two clinical pathologists independently labeled the squamous epithelial cells with bounding boxes as NILM, ASC-US, LSIL, ASC-H, HSIL, or SCC, according to the Bethesda reporting system, using LabelImg (https://github.com/tzutalin/labelImg), a graphical image annotation tool. After labeling, the data were split into single-cell images according to the coordinates of the bounding boxes and rescaled to 224×224 pixels according to the shortest side (Fig. 1). To reduce inter- and intra-observer variability, the annotations were reviewed against the original single-cell images one month after the initial labeling.

To prevent over-fitting, where the model may not accurately predict additional data, data augmentation by image flipping was performed on the training set. Data normalization was applied to reduce the redundant image differences caused by the different environments and staining workflows of the multiple hospitals (18).
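The flipping and normalization steps can be illustrated with a minimal sketch. The flip directions (horizontal and vertical) and the zero-mean, unit-variance normalization are assumptions made for illustration; the text does not specify which flips or which normalization scheme were used. Images are represented as nested lists of grayscale values, and all function names are hypothetical.

```python
from statistics import mean, pstdev


def flip_horizontal(image):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Reverse the order of the rows (top-bottom flip)."""
    return [row[:] for row in image[::-1]]


def augment_with_flips(image):
    """Return the original image plus its two flipped copies."""
    return [image, flip_horizontal(image), flip_vertical(image)]


def normalize(image):
    """Rescale pixels to zero mean and unit variance (assumed scheme)."""
    pixels = [p for row in image for p in row]
    mu, sigma = mean(pixels), pstdev(pixels)
    if sigma == 0:
        # A constant image carries no contrast; map it to all zeros.
        return [[0.0 for _ in row] for row in image]
    return [[(p - mu) / sigma for p in row] for row in image]


# Toy 2x2 "cell image" with hypothetical intensities.
cell = [[0, 50], [100, 250]]
augmented = augment_with_flips(cell)
normalized = normalize(cell)
```

Augmentation is applied to the training set only, so the test images are evaluated unmodified apart from normalization.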

Development of the DCNN model

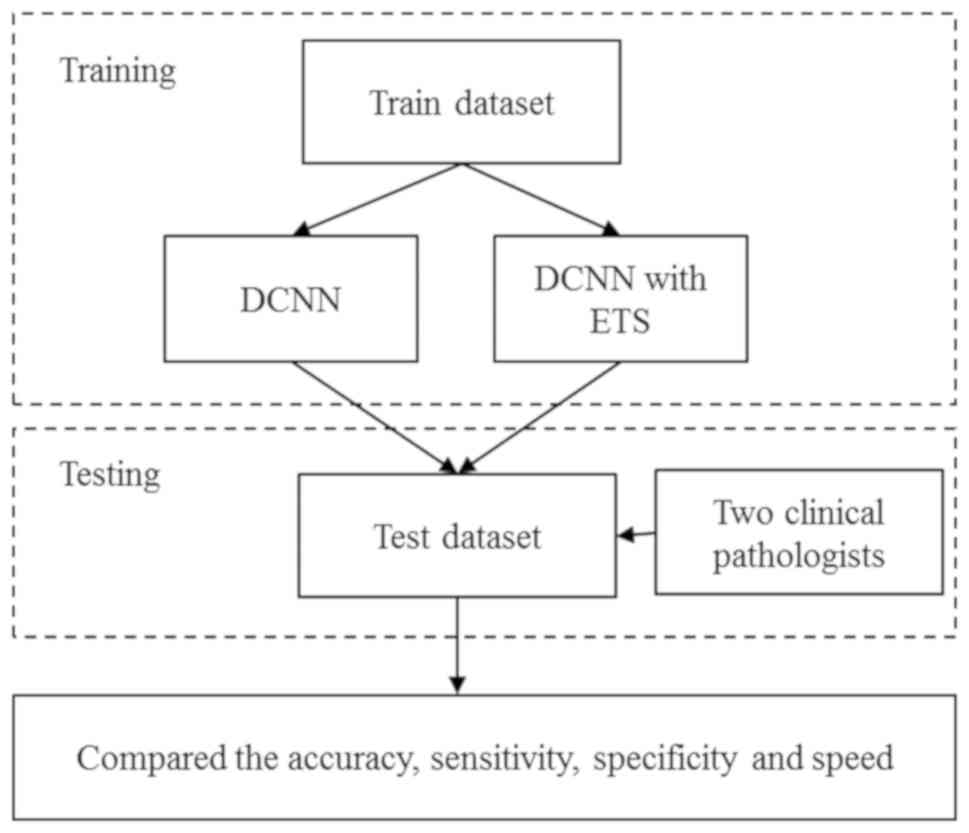

The development of the DCNN model followed a protocol of training and then testing (Fig. 2). The results of the model were compared with those independently obtained by two experienced pathologists. All experiments were run on an Intel Core i7-7800X CPU (3.50 GHz ×12) with four Titan X GPUs, using Python 3.5 (https://www.python.org) and the TensorFlow library (https://www.tensorflow.org). The number of training iterations was set to 2,000, and the initial learning rate was set to 0.01. Batch gradient descent was selected as the optimizer.

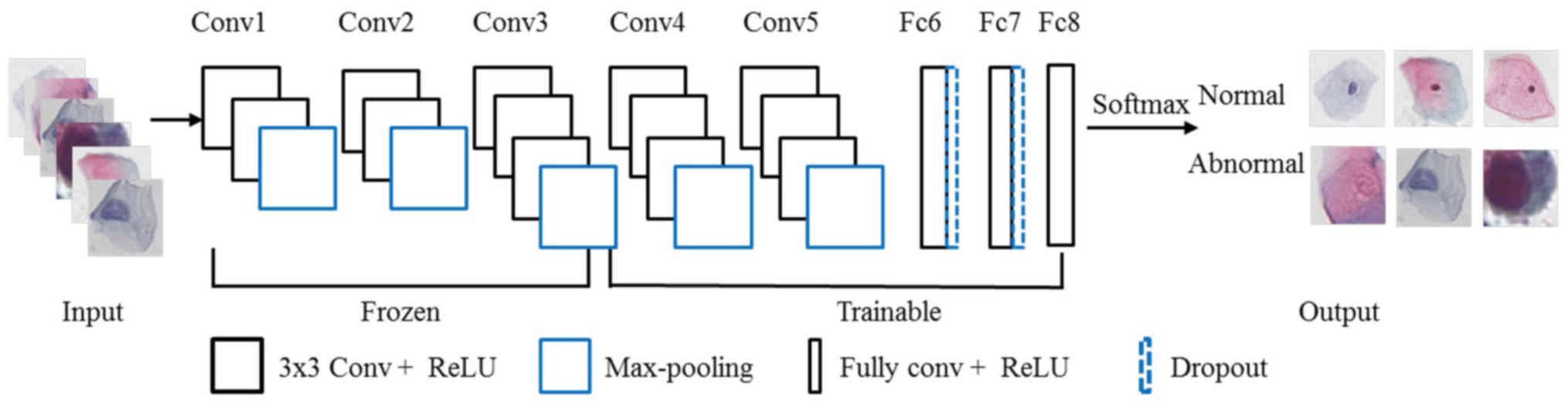

Based on previous literature and preliminary experiments, the VGG16 model was chosen for our experiments because it offers a balance of architecture, accuracy and computational efficiency, and has proven performance in the medical field (19–21). The model comprises 16 weight layers: 13 convolutional layers with 3×3 convolution kernels and 3 fully connected layers. It also contains five max-pooling layers for feature dimensionality reduction and two drop-out layers to prevent over-fitting. First, the original single-cell images were input into the network and represented as a matrix. Then, the convolutional and max-pooling layers extracted the deep features and mapped them onto a hidden layer. The fully connected layers mapped the features onto the sample label space. Finally, the last fully connected layer with a softmax classifier was used to predict the classification probability (Fig. 3). In our experiment, rectified linear units (ReLU) were used as the activation functions.
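To make the layer counts concrete, the following sketch traces a 224×224 input through the standard VGG16 configuration and counts the layers. It does not build or train a network. The two-class output (normal vs. abnormal) and the 4,096-unit fully connected layers follow the standard VGG16 design and the binary task described in this study, and are assumptions about the exact head used here.

```python
# Standard VGG16 layout: integers are the output channels of a 3x3
# convolution, "M" marks a 2x2 max-pooling layer with stride 2.
VGG16_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]


def trace_vgg16(input_size=224, num_classes=2, fc_units=4096):
    """Count layers and track the feature-map size through VGG16."""
    size, channels = input_size, 3
    n_conv = n_pool = 0
    for item in VGG16_CONFIG:
        if item == "M":
            size //= 2          # each pooling layer halves height and width
            n_pool += 1
        else:
            channels = item     # padded 3x3 convolution keeps the spatial size
            n_conv += 1
    fc_layers = [fc_units, fc_units, num_classes]  # assumed classifier head
    return {
        "conv_layers": n_conv,
        "pool_layers": n_pool,
        "fc_layers": len(fc_layers),
        "final_feature_map": (size, size, channels),
        "flattened_features": size * size * channels,
    }


summary = trace_vgg16()
```

With these settings the five pooling layers reduce the 224×224 input to a 7×7×512 feature map, which is flattened before the fully connected layers.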

An ensemble training strategy (ETS) based on 5-fold cross-validation was proposed and employed in our training stage. In this strategy, the training set was randomly divided into five equal parts, with 4/5 used for training and the remaining 1/5 for validation. This division was repeated five times, and each time the model from the previous repetition was used as the pre-trained model for the next. The parameters of the model from the last repetition were taken as the final result.
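One possible reading of this strategy is sketched below: the training indices are split into five folds, each fold serves once as the validation set, and each round starts from the model produced by the previous round. Whether the folds were rotated or re-drawn at each repetition is not stated, so the rotation is an assumption. `train_one_round` is a hypothetical callback standing in for the actual network training, and `dummy_round` only records bookkeeping.

```python
import random


def make_folds(indices, k=5, seed=0):
    """Shuffle the indices and deal them into k nearly equal folds."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::k] for i in range(k)]


def ensemble_training(indices, train_one_round, k=5, seed=0):
    """Run k sequential rounds, warm-starting each from the previous model."""
    folds = make_folds(indices, k, seed)
    model = None  # the first round starts from the initial (pre-trained) weights
    for i in range(k):
        val_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = train_one_round(model, train_idx, val_idx)
    return model  # the model from the last round is the final result


def dummy_round(previous_model, train_idx, val_idx):
    """Hypothetical stand-in for training: only counts the rounds."""
    rounds = 0 if previous_model is None else previous_model["rounds"]
    return {"rounds": rounds + 1,
            "n_train": len(train_idx),
            "n_val": len(val_idx)}


# 2,669 is the size of the training set reported in this study.
final_model = ensemble_training(range(2669), dummy_round)
```

Unlike conventional cross-validation, which trains five independent models, this scheme produces a single model that has been fine-tuned on every fold in turn.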

Transfer learning was used to improve the

generalizability of the model (22).

The dataset was used to fine-tune the DCNN model that was trained

on the ImageNet dataset (23). To

reduce computational complexity, the first seven layers of the DCNN

network were frozen, and the remaining nine layers were trainable

in the training stage.

We further improved the model generalizability and

prevented over-fitting with early-stopping (24,25). The

classical stochastic gradient descent optimizer was selected, and

the learning rate changed adaptively with the introduction of a

variable factor G, defined as:

$$G := \left(L_t^i - L_v^i\right)^2 \frac{d}{dL_v^i}$$

where $L_t^i$ indicates the loss value at the $i$th iteration in the training stage, and $L_v^i$ stands for the loss value at the $i$th iteration in the validation stage. When G is greater than a threshold (set to 5 in our experiment), the learning rate is adjusted to 0.1 times the current learning rate.
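The threshold rule itself can be sketched in a few lines. The function below takes a precomputed value of G as input and does not attempt to reproduce how G is calculated; the sequence of G values is hypothetical, and the function name is illustrative only.

```python
def adjust_learning_rate(learning_rate, g, threshold=5.0, decay=0.1):
    """Multiply the learning rate by `decay` whenever G exceeds the threshold."""
    if g > threshold:
        return learning_rate * decay
    return learning_rate


# Start from the initial learning rate of 0.01 used in this study and
# apply the rule to a hypothetical sequence of G values.
lr = 0.01
history = []
for g in [1.2, 6.3, 0.4, 7.9]:
    lr = adjust_learning_rate(lr, g)
    history.append(lr)
```

In this toy run the learning rate is reduced twice, once for each G value above the threshold of 5.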

Evaluation

The performance of the proposed DCNN model was evaluated by measuring its accuracy, precision, sensitivity, F1 value, and speed. Accuracy was defined as the proportion of correctly classified samples among all samples, and precision was defined as the proportion of samples classified as positive that were truly positive. Sensitivity was defined as the proportion of positive samples that were correctly classified among all positive samples. The F1 value is a composite index of model performance that reflects both precision and sensitivity. Speed was defined as the average time taken to identify one cell, and it was calculated to evaluate the efficiency of the DCNN model. In addition, independent component analysis (ICA), which is a popular method to represent multivariate data (26), was used to visualize the features of cells in the test dataset before and after classification by the DCNN model (27).
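For reference, the four classification metrics can be computed from a binary confusion matrix using their standard definitions, as in the sketch below. The counts in the example are hypothetical and are not taken from this study; treating abnormal cells as the positive class is also an assumption.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard confusion-matrix metrics for a binary classifier.

    tp/fn: positive samples classified correctly/incorrectly.
    tn/fp: negative samples classified correctly/incorrectly.
    Assumes at least one predicted positive and one actual positive.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    # F1 is the harmonic mean of precision and sensitivity.
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "precision": precision,
            "sensitivity": sensitivity, "f1": f1}


# Hypothetical counts for illustration only.
metrics = binary_metrics(tp=90, fp=5, fn=10, tn=95)
```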

Two experienced pathologists (Pathologist 1 and Pathologist 2, with 12 and 3 years of working experience, respectively) were invited to classify the squamous epithelial cells independently, and their performance was compared with that of the DCNN models.

Statistical analysis

The classification accuracy of the DCNN models and the pathologists was compared using paired-sample t-tests. Statistical analyses were performed using SPSS software (version 16.0). P<0.05 was considered to indicate a statistically significant difference.
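The study used SPSS for this test; the sketch below only illustrates how the paired-sample t statistic is formed from per-subtype accuracies, using the Python standard library. Both accuracy lists are hypothetical. Because the standard library has no t-distribution, the example compares |t| with the two-sided 5% critical value for 5 degrees of freedom (2.571) instead of computing a P-value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    return mean(diffs) / (sd / sqrt(n)), n - 1


# Hypothetical per-subtype accuracies (%) for a model and a rater.
model_acc = [98.9, 76.0, 100.0, 97.5, 99.5, 85.0]
rater_acc = [99.0, 80.0, 100.0, 97.0, 99.0, 86.0]
t_value, dof = paired_t_statistic(model_acc, rater_acc)

T_CRITICAL_DF5 = 2.571  # two-sided, alpha = 0.05, 5 degrees of freedom
significant = abs(t_value) > T_CRITICAL_DF5
```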

Results

DCNN models and classification

accuracy

The DCNN model with ETS achieved slightly better accuracy, precision, sensitivity, and F1 value in identifying lesion cells in the test dataset than the model without ETS, and its performance was similar to that of the pathologists (Table II). The training strategy improved the accuracy by 1.29%, the precision by 1.22%, the sensitivity by 1.12%, and the F1 value by 1.32%. Compared with Pathologist 1, the DCNN model with ETS had 0.80% lower accuracy, 1.19% lower precision, 0.80% lower sensitivity, and 0.86% lower F1 value. Compared with Pathologist 2, the DCNN model with ETS had 0.33% higher accuracy, 0.85% higher precision, and 1.47% higher F1 value, but 0.50% lower sensitivity. When the accuracy was examined by lesion subtype, the differences between the accuracy of the models and that of each pathologist were not significant (Table II; DCNN without ETS vs. DCNN with ETS, P=0.387; DCNN with ETS vs. Pathologist 1, P=0.771; DCNN with ETS vs. Pathologist 2, P=0.489). The accuracy of the DCNN model with ETS varied by lesion subtype, with ASC-H and HSIL having the highest accuracies (100%) and ASC-US having the lowest (75%; Table III).

Table II. Performance of the DCNN models and the pathologists on the test dataset.

| Variables | DCNN without ETS | DCNN with ETS | Pathologist 1 | Pathologist 2 |
|---|---|---|---|---|
| Accuracy (%) | 96.78 | 98.07 | 98.87 | 97.74 |
| Precision (%) | 96.69 | 97.91 | 99.10 | 97.06 |
| Sensitivity (%) | 96.89 | 98.01 | 98.81 | 98.51 |
| F1 (%) | 96.77 | 98.09 | 98.95 | 96.62 |
| Speed (sec) | 0.0768 | 0.0732 | 0.4638 | 0.4992 |

Table III. Accuracy of the model with ETS for different lesion subtype classifications.

| Variables | NILM | ASC-US | ASC-H | LSIL | HSIL | SCC |
|---|---|---|---|---|---|---|
| Class | Normal | Abnormal | Abnormal | Abnormal | Abnormal | Abnormal |
| Test dataset | 285 | 24 | 2 | 92 | 211 | 7 |
| Correctly classified | 282 | 18 | 2 | 90 | 211 | 6 |
| Misclassified | 3 | 6 | 0 | 2 | 0 | 1 |
| Accuracy (%) | 98.95 | 75 | 100 | 97.83 | 100 | 85.71 |
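The per-subtype accuracies in Table III follow directly from the counts, as the short check below shows. It also pools the counts over all six subtypes, which gives an overall accuracy that agrees with the 98.07% reported for the DCNN with ETS in Table II. The variable names are illustrative.

```python
# (cells in test dataset, correctly classified cells), from Table III.
test_counts = {
    "NILM": (285, 282),
    "ASC-US": (24, 18),
    "ASC-H": (2, 2),
    "LSIL": (92, 90),
    "HSIL": (211, 211),
    "SCC": (7, 6),
}

# Accuracy per subtype, in percent, rounded to two decimals.
per_subtype = {name: round(100 * correct / total, 2)
               for name, (total, correct) in test_counts.items()}

# Overall accuracy from the pooled counts.
total_cells = sum(total for total, _ in test_counts.values())
total_correct = sum(correct for _, correct in test_counts.values())
overall = round(100 * total_correct / total_cells, 2)
```

The pooled totals are 609 correct classifications out of 621 test cells.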

DCNN models and classification

efficiency

In terms of classification efficiency, the DCNN model with ETS achieved the fastest speed (0.0732 sec per cell; Table II): it was 0.0036 sec faster than the model without ETS, 0.3906 sec faster than Pathologist 1, and 0.4260 sec faster than Pathologist 2. The classification speed of the DCNN models was more than six times that of the pathologists.

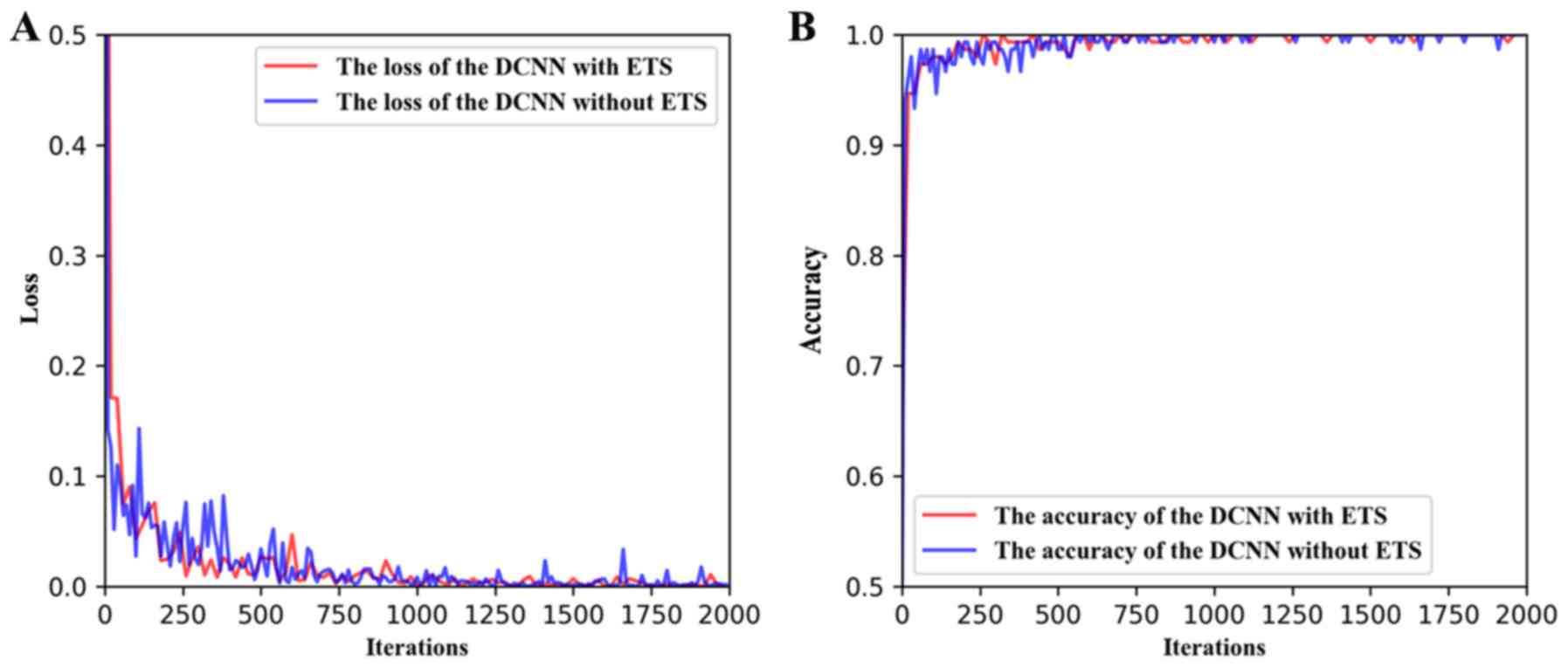

We visualized the loss and accuracy values during the training stage of the DCNN models. The final loss values of both models were <0.1, and the accuracy values were >0.9 after several iterations (Fig. 4). However, the loss values for the DCNN with ETS were consistently lower and smoother than those without ETS, indicating that the DCNN model with ETS had a more stable performance (Fig. 4).

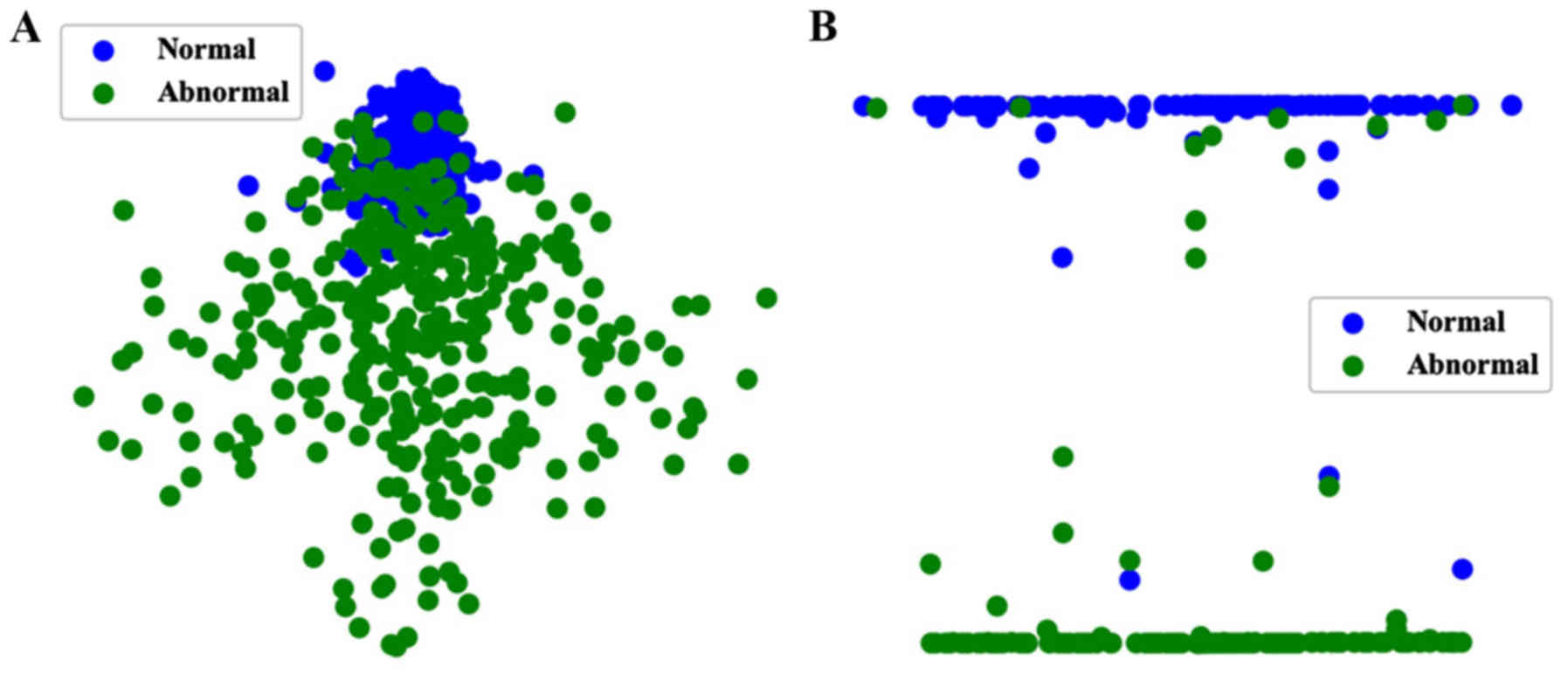

DCNN model and ICA

Visualization of the test dataset using ICA revealed

that the normal and abnormal cells were separated into two distinct

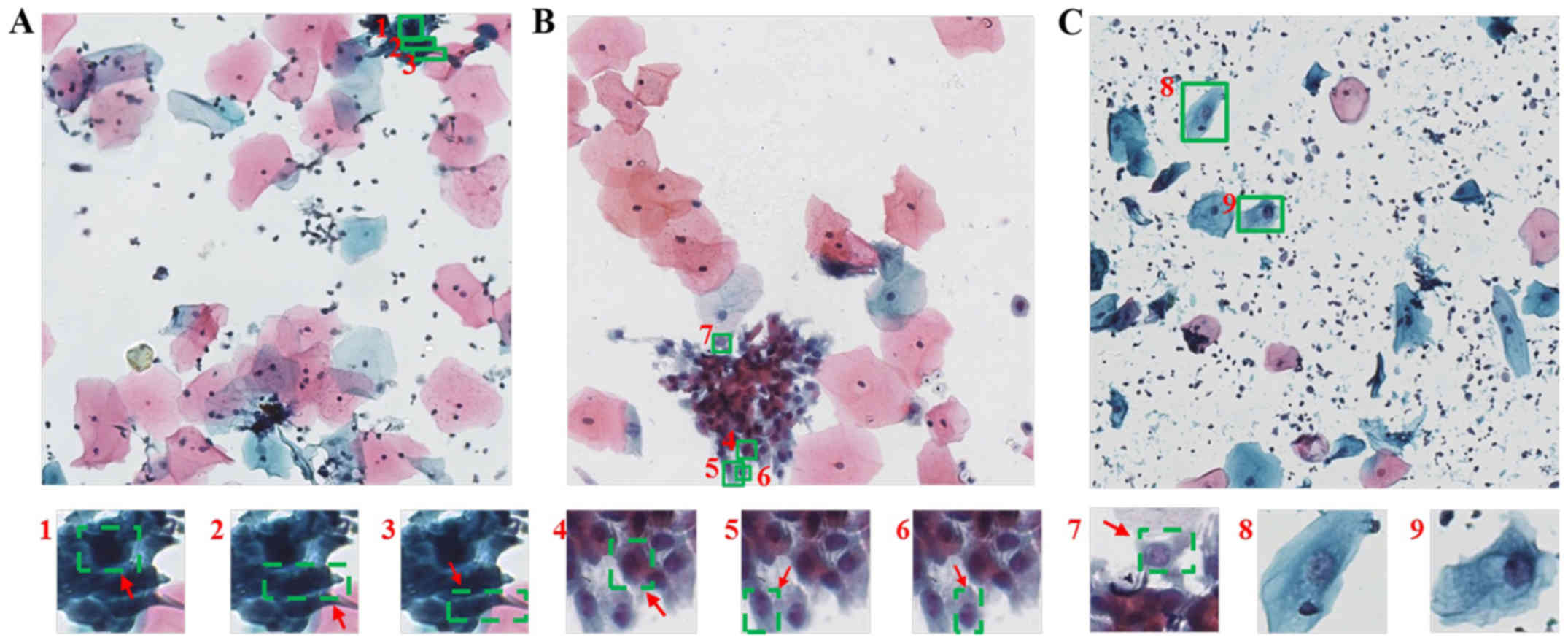

classes after identification with the DCNN model with ETS (Fig. 5). Simultaneously, we randomly showed

some cells which were classified correctly and put into 2048×2048

images (Fig. 6). It indicated that

the DCNN model could classify the cells which had unclear nuclei,

even in the cell cluster.

Discussion

In the present study, we developed a DCNN model

based on VGG16 for the automated classification of cervical

squamous cells that accurately differentiated between normal cells

and lesion cells in a multi-center TCT dataset. Compared to

classifications independently provided by two experienced

pathologists, the AI system achieved similar classification

accuracy with improved efficiency.

Our dataset was composed of many TCT images that originated from four different hospitals, which differed in their hospital levels and TCT machines. These sources of variation provided diversity within the dataset that was used to train and optimize the DCNN algorithm, thereby improving the generalizability of this model.

Our dataset is small relative to the large-scale datasets, such as ImageNet with its millions of images (23), that are required to train most DCNN models. Therefore, we employed ETS based on 5-fold cross-validation, which improved the accuracy and speed compared with the traditional DCNN model. At the same time, in terms of model performance, the loss of the DCNN model with ETS converged better and faster than that of the model without ETS. Even though the inclusion of ETS did not significantly improve the accuracy, the incremental increase in accuracy may still be clinically important. Future studies will continue to verify the classification performance of this method.

The classification accuracy of the DCNN model with

ETS was 98.07% for our multi-center dataset. The ICA analysis found

that the characteristics of normal squamous epithelial cells before

classification were more concentrated, and the characteristics of

abnormal cells were scattered and polymorphic. This observation may

be mainly due to the presence of many subtypes in our test dataset,

including ASC-US, LSIL, ASC-H, HSIL, and SCC. The characteristics

of some abnormal cells are very similar to each other. Meanwhile,

the features of normal and abnormal cervical squamous epithelial

cells classified by the DCNN model were clearly distinguished,

which indicates that the DCNN could accurately classify cervical

squamous epithelial cells with fine-grained characteristics.

The classification accuracy of the DCNN model with ETS differed among lesion subtypes. SCC is the most direct evidence for the diagnosis of cervical cancer (7). Compared with precancerous cells, SCC cells show significant nuclear atypia, yet our model only achieved 85.71% accuracy for this subtype. This lower accuracy may be due to the significantly smaller number of SCC cells (n=39) than of other classes in our training dataset, which is known as the class-imbalance problem. The low classification accuracy for ASC-US (75%) may be due to the high similarity of features among ASC-US, NILM, and LSIL. In fact, even experienced pathologists can have difficulty distinguishing among these subtypes. Inspection of the correctly classified cells demonstrated that the model could still classify cells accurately even when they were isolated from cell clusters, were small, had deeply stained nuclei that were difficult to distinguish, or had unclear nuclei.

The DCNN model developed here provides a similar

classification performance to that of the pathologists, but with a

highly improved classification efficiency. This result indicates

that our model could assist pathologists with the interpretation of

TCT specimens and reduce their workload. In the future, this highly

efficient automated method may be widely used in clinical settings

to overcome the subjective and time-consuming nature of the manual

diagnosis.

Researchers have previously proposed DCNN models to

classify samples of cervical cells. Zhang et al (13) used a DCNN to classify normal and

abnormal cervical cells and found accuracies of 98.3 and 98.6% for

the two public datasets, Herlev dataset and HEMLBC dataset,

respectively. However, the testing time for one cervical cell

averaged 3.5 sec. Wu et al (14) used a DCNN to classify keratinizing

squamous, non-keratinizing squamous, and basaloid squamous cells in

a single-center dataset, and achieved an accuracy of 93.33% (speed

was not reported). While the accuracies reported in these two

studies were high, the authors did not externally validate the

results by comparing classification with those provided by

pathologists. Furthermore, the diagnosis efficiency reported by

Zhang et al (13) did not

meet clinical needs.

In summary, our study focused on developing an

accurate and efficient automated method that could be used in

clinics to assist pathologists in interpreting TCT

early-screenings. There are four main limitations of the method

employed in the present study: i) We used images of single cells

that were extracted from whole slide images in advance. Future

studies should focus on detecting the cervical squamous lesion

cells in whole slide images without any prior segmentation or

splits. ii) Our study only included a binary classification of

cervical squamous cells. Future studies should focus on a

multi-classification model for cervical squamous cells. iii) The

small size of our dataset and the class-imbalance problem have a

negative effect on the results, thus more data should be collected

from clinics, especially on ASC-US, ASC-H, and SCC cells in the

future. iv) Our data were collected from the southwestern region of

China, and this geographically limited dataset may limit the

generalizability of the resulting model. Future studies should

focus on collecting data globally.

Acknowledgements

Not applicable.

Funding

The present study was supported by the National Key

Research and Development Plan (grant no. 2016YFC0106403), the

National Natural Science Foundation of China (grant nos. 31771324

and 61701506), the Military Medical Research Program Youth

Development Project (grant nos. 16QNP100 and 2018XLC3023) and the

Graduate Education and Teaching Reform Project of Chongqing (grant

no. yjg183144).

Availability of data and materials

The datasets analyzed during the present study are

not publicly available as they are part of an ongoing study.

Authors' contributions

LL conceived the study, analyzed the data, performed

the statistical analysis and drafted the initial manuscript. LL and

JX created the DCNN model and analyzed the data. YW and QM

collected the TCT dataset and performed pathological analysis. LT

and YW were involved in project development and critically reviewed

the manuscript. All authors read and approved the final

manuscript.

Ethics approval and consent to

participate

The present study was approved by the Ethics

Committee of the Army Military Medical University (Chongqing,

China; approval no. KY201774). The study was explained to all the

patients, and oral informed consent was obtained from them for

their sample to be used for scientific research by phone when

images were collected. Written patient consent was not required,

according to the guidance of the ethics committee.

Patient consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing

interests.

References

1. Small W Jr, Bacon MA, Bajaj A, Chuang LT, Fisher BJ, Harkenrider MM, Jhingran A, Kitchener HC, Mileshkin LR, Viswanathan AN and Gaffney DK: Cervical cancer: A global health crisis. Cancer. 123:2404–2412. 2017.

2. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA and Jemal A: Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 68:394–424. 2018.

3. Globocan: 2018. China factsheet. July 18, 2020.

4. Cheng WQ, Zheng QS, Zhang SW, Zeng HM, Zuo TT, Jia MM, Xia CF, Zhou XN and He J: Analysis of incidence and death of malignant tumors in China in 2012. China Cancer. 25:1–8. 2016.

5. Chacho MS, Mattie ME and Schwartz PE: Cytohistologic correlation rates between conventional Papanicolaou smears and ThinPrep cervical cytology: A comparison. Cancer. 99:135–140. 2003.

6. Behtash N and Mehrdad N: Cervical cancer: screening and prevention. Asian Pac J Cancer Prev. 7:683–686. 2006.

7. Ritu N and Wilbur D: The Bethesda System for reporting cervical cytology: Definitions, criteria, and explanatory notes. Springer International Publishing; Berlin: 2015.

8. Xie Y, Zhang J, Xia Y and Shen C: A mutual bootstrapping model for automated skin lesion segmentation and classification. IEEE Trans Med Imaging. 39:2482–2493. 2020.

9. Gehlot S, Gupta A and Gupta R: SDCT-AuxNetθ: DCT augmented stain deconvolutional CNN with auxiliary classifier for cancer diagnosis. Med Image Anal. 61:101661. 2020.

10. Murugan A, Nair SAH and Kumar KPS: Detection of skin cancer using SVM, random forest and kNN classifiers. J Med Syst. 43:269. 2019.

11. Abdulkerim C, Abdülkadir A, Aslı Ü, Nurullah Ç, Gökhan B, Ilknur T, Aslı Ç, Behçet UT and Lütfiye DA: Segmentation of precursor lesions in cervical cancer using convolutional neural networks. 25th Signal Processing and Communications Applications Conference (SIU). 2017.

12. Chankong T, Theera-Umpon N and Auephanwiriyakul S: Automatic cervical cell segmentation and classification in Pap smears. Comput Methods Programs Biomed. 113:539–556. 2014.

13. Zhang L, Lu L, Nogues I, Summers RM, Liu S and Yao J: DeepPap: Deep convolutional networks for cervical cell classification. IEEE J Biomed Health Inform. 21:1633–1643. 2017.

14. Wu M, Yan C, Liu H, Liu Q and Yin Y: Automatic classification of cervical cancer from cytological images by using convolutional neural network. Biosci Rep. 38:BSR20181769. 2018.

15. Tian Y, Yang L, Wang W, Zhang J, Tang Q, Ji M, Yu Y, Li Y, Yang H and Qian A: Computer-aided detection of squamous carcinoma of the cervix in whole slide images. arXiv: 1905.10959. 2019.

16. Liu L, Wang Y, Wu D, Zhai Y, Tan L and Xiao J: Multi-task learning for pathomorphology recognition of squamous intraepithelial lesion in Thinprep cytologic test. ISICDM. 10:73–77. 2018.

17. Miyagi Y, Takehara K, Nagayasu Y and Miyake T: Application of deep learning to the classification of uterine cervical squamous epithelial lesion from colposcopy images combined with HPV types. Oncol Lett. 19:1602–1610. 2020.

18. Weiss S, Xu ZZ, Shyamal P, Amir A, Bittinger K, Gonzalez A, Lozupone C, Zaneveld JR, Vázquez-Baeza Y, Birmingham A, et al: Normalization and microbial differential abundance strategies depend upon data characteristics. Microbiome. 5:27. 2017.

19. Simonyan K and Zisserman A: Very deep convolutional networks for large-scale image recognition. arXiv: 1409.1556. 2014.

20. Saikia AR, Bora K, Mahanta LB and Das AK: Comparative assessment of CNN architectures for classification of breast FNAC images. Tissue Cell. 57:8–14. 2019.

21. Sharma S and Mehra R: Conventional machine learning and deep learning approach for multi-classification of breast cancer histopathology images-a comparative insight. J Digit Imaging. 33:632–654. 2020.

22. Pan SJ and Yang Q: A survey on transfer learning. IEEE Trans Knowledge Data Eng. 10:1345–1359. 2010.

23. Deng J, Dong W, Socher R, Li LJ, Li K and Li FF: ImageNet: A large-scale hierarchical image database. IEEE Conference on Computer Vision and Pattern Recognition. 248–255. 2009.

24. Takase T, Oyama S and Kurihara M: Effective neural network training with adaptive learning rate based on training loss. Neural Netw. 101:68–78. 2018.

25. Mo Z, Wang Q, Tian S, Yan R, Geng J, Yao Z and Lu Q: Evaluating treatment via flexibility of dynamic MRI community structures in depression. J Southeast Univ. 33:273–276. 2017.

26. Hyvärinen A and Oja E: Independent component analysis: Algorithms and applications. Neural Netw. 13:411–430. 2000.

27. Taqi AM, Awad A, Al-Azzo F and Milanova M: The impact of multi-optimizers and data augmentation on TensorFlow convolutional neural network performance. IEEE Conference on Multimedia Information Processing Retrieval. 140–145. 2018.