Introduction

Primary central nervous system lymphoma (PCNSL) is

recognized as a distinct category of lymphoma, accounting for ~4%

of all brain tumors in the United States (1). In comparison with other lymphoma

types, the prognosis for PCNSL is considerably worse, with 5-

and 10-year relative survival rates of 35.2 and 27.5%,

respectively (2–4). Furthermore, recent epidemiological

data showed a significant rise in PCNSL incidence, reaching 0.4

cases per 100,000 population in 2022 (4), partly due to the aging global

population (2,5). In parallel, several deep learning

methodologies, enhanced by computer technology, have been utilized

in the diagnosis and treatment of numerous cancers, including brain

tumors, to transform cancer care (6–8).

Despite considerable progress derived from a deeper comprehension

of the disease regarding treatment, challenges remain in the

management of PCNSL. Only a limited number of studies have used

advanced methodologies in their investigation, including our

previous research contributions (9–12).

This shortage largely stems from the relatively infrequent

occurrence of PCNSL, a constrained patient demographic and the high

mortality rate associated with PCNSL when compared with other

lymphoma types (4,5). Therefore, the present study aimed to

contribute meaningfully to tackling the aforementioned

challenges.

Magnetic resonance imaging (MRI) using

gadolinium-based contrast agents has been used for the detection of

PCNSL, serving as the most sensitive imaging modality within

radiographic evaluations. Accurate assessment of lesions using

gadolinium-enhanced MRI is essential for planning therapy,

monitoring and determining prognosis in PCNSL (13–15),

similar to glioma and meningioma. Studies concerning brain tumors,

such as glioma and meningioma, have reported that deep learning

techniques leveraging MRI can cohesively integrate processes such

as medical image fusion, segmentation, feature extraction and

classification to effectively classify and segment tumors with

marked reproducibility (16,17).

Advancements in computer-aided medicine have notably

improved the detection and segmentation of brain tumors using deep

learning techniques, facilitating their transition into broader

clinical practice (18–20). The implementation of deep learning

models for automated tumor detection and segmentation has emerged

as a transformative approach, presenting opportunities for

integration into long-term treatment monitoring. Nonetheless,

achieving precise segmentation of brain lymphoma remains a clinical

challenge due to the intricate anatomical features of the tumor and

the overlap between the tumor margins and normal brain tissue

(21). Moreover, previous findings

indicate that standard 2-dimensional (2D) measurements, which have

traditionally been used to assess tumor size and progression, are

not as dependable or accurate as volumetric assessments. Whilst 2D

measurements rely on simplified metrics, such as maximum diameter

or cross-sectional area, they often fail to capture the complex

3-dimensional (3D) morphology of brain tumors, leading to potential

inaccuracies in clinical evaluation and treatment planning

(22). Manual segmentation for

evaluating brain tumor volumes is labor-intensive and highly

susceptible to variability among different raters, resulting in

inconsistent interpretations among imaging professionals (22,23).

Such inconsistencies can severely affect subsequent evaluations of

the disease, treatment choices and monitoring efforts, ultimately

impacting the survival and prognosis of patients with PCNSL. Thus,

there is a growing need for a segmentation approach that can

effectively address the challenges associated with 2D measurements

and manual volume assessments. The application of computer-assisted

automatic segmentation is regarded as a promising solution, and

deep learning enables automated lesion segmentation, reduces

variability, enhances throughput and improves the detection of

complex lesions.

Currently, deep learning-based approaches dominate

the field of medical image segmentation, with U-Net and its

variants (including U-Net++, Attention U-Net and 3D U-Net) serving

as landmark technologies. These architectures have demonstrated a

notable performance in several segmentation tasks, particularly in

brain tumors, lung cancer and breast cancer imaging (24). However, despite their clinical

potential, these advanced techniques have not been systematically

applied to PCNSL segmentation, to the best of our knowledge. Our

previous research did not explore the potential of nnU-Net. The

limited number of PCNSL cases and the available imaging data have

resulted in only a few studies focusing on deep learning models for

PCNSL, largely concentrating on differentiating it from other brain

tumors, such as glioblastoma (9,10).

Moreover, there has been a scarcity of recent investigations

applying deep learning for the automatic segmentation of

multi-parameter MRI in PCNSL (11).

As several studies have reported the effectiveness of automatic

segmentation models based on deep learning for specific brain

tumors, such as glioma and meningioma, with notable rates of

automated detection and precision across several tumor compartments

(22,25,26),

we hypothesize that these deep learning models could also attain a

certain level of accuracy in recognizing and segmenting PCNSL.

Consequently, the aim of the present study was to create automated

deep learning segmentation models that leverage multi-sequence MRI

images as input to reliably detect PCNSL tumors. This method would

enable the quantitative calculation of volumes and assess the

accuracy and consistency of the outcomes. To address the

challenges, the present study used the nnU-Net deep learning

framework (27), known for its

versatility and effectiveness with limited sample sizes, without

requiring manual parameter tuning (28,29).

Materials and methods

Study population

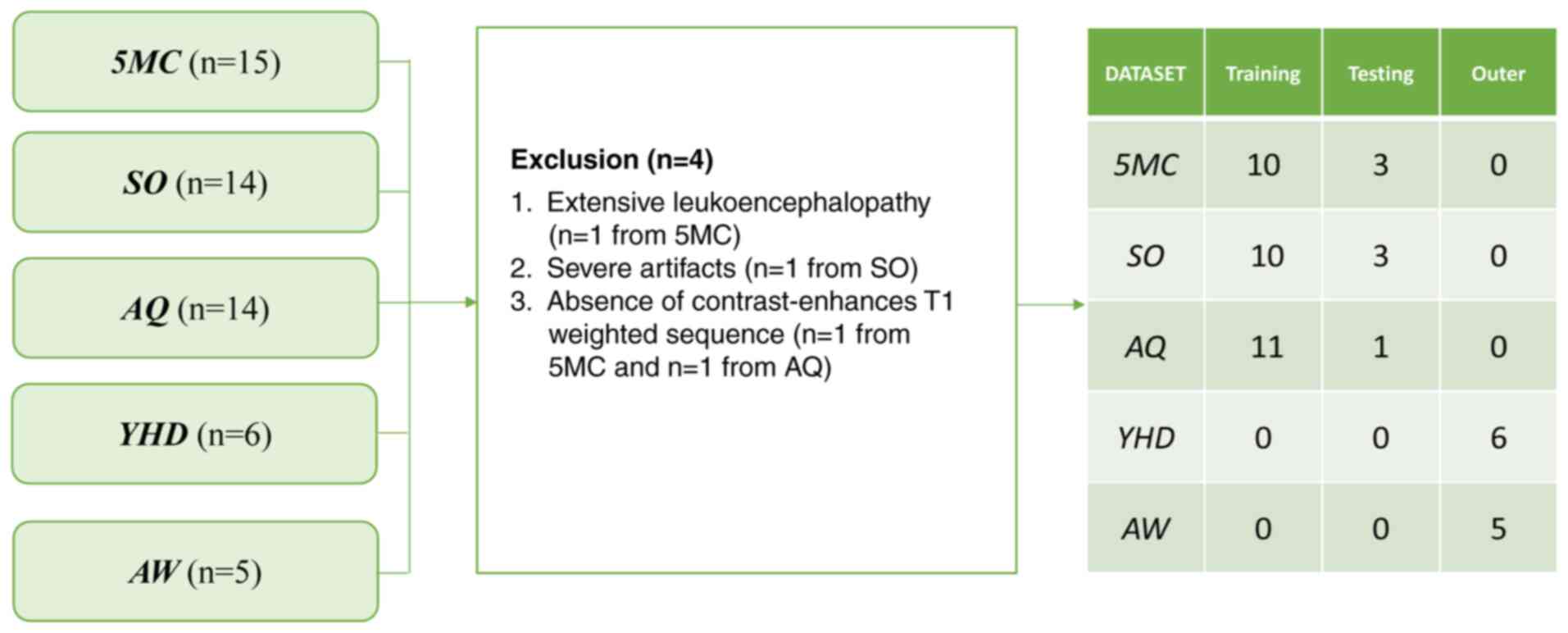

The present retrospective study enrolled patients

from the Fifth Medical Center of Chinese PLA General Hospital

(Beijing, China), Shengli Oilfield Central Hospital (Dongying,

China), Affiliated Hospital of Qingdao University (Qingdao, China),

Yantai Yuhuangding Hospital (Yantai, China) and Weifang People's

Hospital (Weifang, China). The study was performed according to the

principles of the Helsinki Declaration. To ensure consistent

ethical standards across all participating institutions, the five

hospitals adopted an ethical collaborative review mechanism (lead

institution review with participating institution endorsement). The

relevant approval documents were provided by Shengli Oilfield

Central Hospital and endorsed by the ethics committees of the other

four participating institutions (approval no. YX11202401101; March

12, 2024). The ethics committees of the participating hospitals

waived the requirement for patient informed consent.

Patients who underwent MRI scans and were initially

pathologically diagnosed with PCNSL between September 2016 and

March 2022 were recruited. All 53 PCNSL cases identified for the

present study were evaluated for the predetermined exclusion and

inclusion criteria. These criteria were applied consistently to all

patients, regardless of initial or follow-up imaging. The inclusion

criteria were as follows: i) Confirmed PCNSL diagnosis by

pathology; ii) received treatment at the designated 5 hospitals

between September 2016 and March 2022; and iii) completed

in-hospital MRI examinations. The exclusion criteria were as

follows: i) Extensive leukoencephalopathy (Fazekas III; n=1)

(30); ii) severe artifacts (n=1);

and iii) absence of an available complete MRI dataset (n=2). The

exclusion of patients based on these confounding images was

essential for ensuring the accurate representation of tumor

morphology and minimizing feature extraction bias during model

training. The final cohort consisted of 49 patients with PCNSL who were

successfully assessed (Fig. 1).

Image acquisition

All examinations were performed on one of two 3T MRI

scanners, a Siemens Healthineers scanner or a Signa scanner

(GE Healthcare), and adhered to the standards outlined in the Expert

Consensus on MRI Examination Techniques. Contrast-enhanced

T1-weighted (T1W) and T2-weighted (T2W) images were collected.

Gadopentetate dimeglumine was administered intravenously at doses

based on the body weight (0.1 mmol/kg). The field of view was set

to 256 mm, the matrix was 512×512 and the slice thickness was 5 mm.

The images were reconstructed with a standard kernel and then were

transferred to an external workstation (syngo MMWP VE 36A; Siemens

Healthineers) for further postprocessing.

Image pre-processing

The images were reviewed by a radiologist from

Shengli Oilfield Central Hospital with >9 years of experience in

radiology, who manually delineated the tumors on the axial images

using the Medical Imaging Interaction Toolkit, version 2018.04.2

(www.mitk.org). The marked regions of interest

were confirmed as ground truth by another senior neuroradiologist

(with 11 years of experience in radiology) from the Fifth Medical

Center of Chinese PLA General Hospital, who was blinded to the

assessment.

Image segmentation

A total of two segmentation models were established

using T1C and T2 scans, respectively. The obtained PCNSL dataset

was divided into a training dataset (n=30), a testing dataset (n=8)

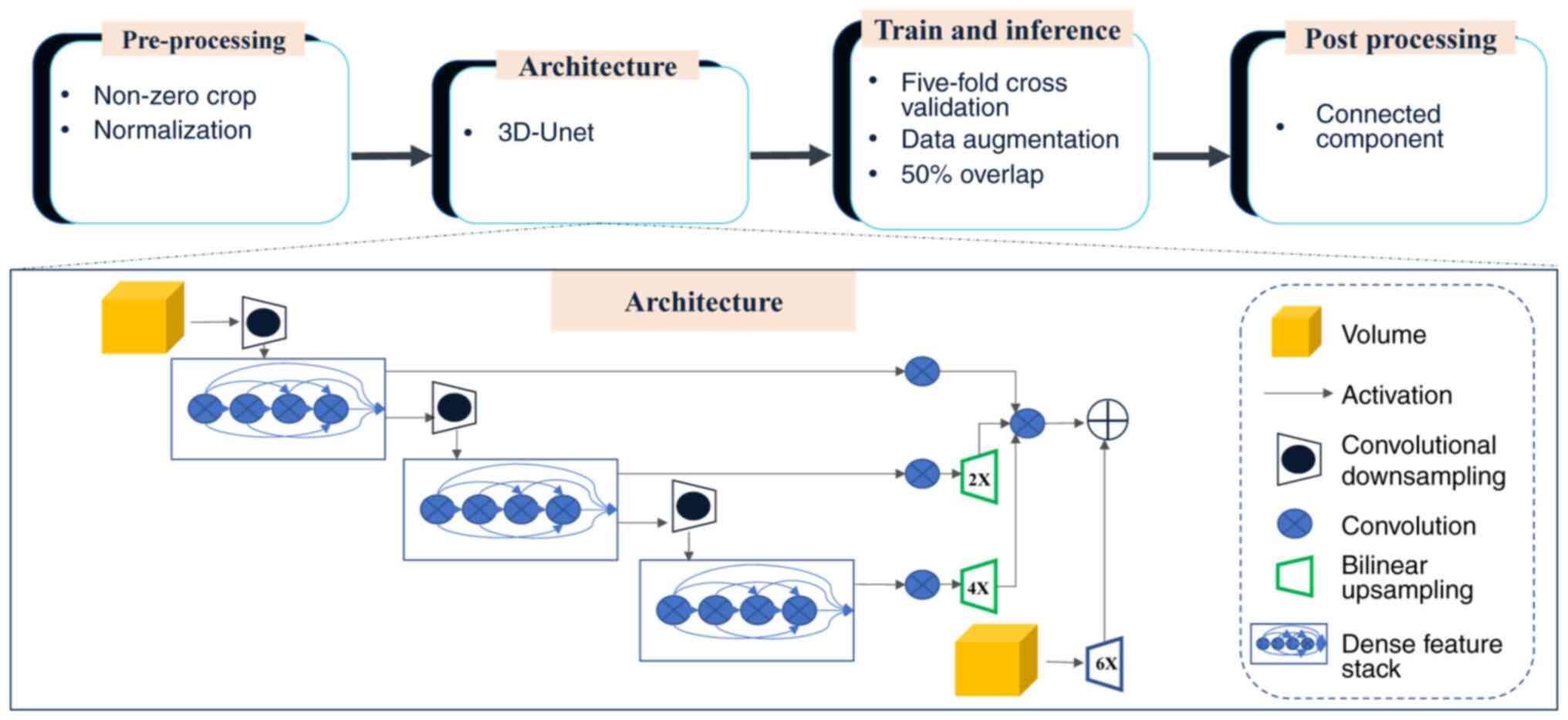

and an outer validation dataset (n=11). Subsequently, the nnU-Net

deep learning network was utilized to train an automated

segmentation model (27). The nnU-Net

framework is an adaptive extension of the conventional U-Net

architecture. This innovative framework incorporates automated

adaptation mechanisms to accommodate diverse datasets and clinical

applications, thereby minimizing manual parameter optimization

requirements. The core design philosophy of the framework minimizes

unnecessary architectural complexity whilst optimizing essential

elements. This approach ensures reliable performance across diverse

medical imaging applications while preserving clinical relevance

(31). By leveraging nnU-Net, the

present study streamlined the key decisions involved in designing

an effective segmentation pipeline for the specific dataset. This

process is illustrated in Fig. 2.

The default nnU-Net framework was used and

adapted to the characteristics of the data by choosing the 3D

fullres model and retaining the original resolution. Preprocessing

and data augmentation steps were applied before training to improve

segmentation accuracy. To minimize the computational burden and

reduce the matrix size, the scans were cropped to the region with

non-zero values. The datasets were then resampled to the median voxel

spacing using third-order spline interpolation for images

and nearest-neighbor interpolation for masks. This resampling

helped the neural networks learn the spatial

semantics of the scans. Finally, the intensities were normalized

by clipping to the 0.5th and 99.5th percentiles of the intensity

values, followed by z-score normalization using the mean and

standard deviation of all collected intensity values in each

sample. In the training procedure, the 3D fullres model was used

and a combination of dice and cross-entropy loss was utilized

according to the following formula:

$$L = L_{\text{cross-entropy}} + L_{\text{dice}}$$
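The preprocessing and loss described above can be illustrated with a minimal NumPy sketch. It is not the nnU-Net implementation: the function names are hypothetical, the normalization is applied per sample as the text describes, and the Dice term is written as one minus a soft Dice score, which is one common formulation and an assumption here. Resampling is omitted.

```python
import numpy as np


def crop_to_nonzero(volume):
    """Crop a 3D volume to the bounding box of its non-zero voxels."""
    nonzero = np.argwhere(volume != 0)
    if nonzero.size == 0:
        return volume  # nothing to crop in an all-zero volume
    lo = nonzero.min(axis=0)
    hi = nonzero.max(axis=0) + 1
    return volume[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]


def normalize_intensity(volume, lower_pct=0.5, upper_pct=99.5):
    """Clip to the given percentiles, then z-score with the sample's own mean and SD."""
    lo, hi = np.percentile(volume, [lower_pct, upper_pct])
    clipped = np.clip(volume, lo, hi).astype(np.float64)
    std = clipped.std()
    return (clipped - clipped.mean()) / (std if std > 0 else 1.0)


def dice_ce_loss(prob, target, eps=1e-7):
    """Combined loss L = L_crossentropy + L_dice for a binary tumor mask.

    prob:   predicted foreground probabilities in (0, 1)
    target: binary ground-truth mask of the same shape
    The Dice term is (1 - soft Dice); this form is an assumption.
    """
    prob = np.clip(np.asarray(prob, dtype=np.float64), eps, 1.0 - eps)
    target = np.asarray(target, dtype=np.float64)
    cross_entropy = -np.mean(
        target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob)
    )
    intersection = np.sum(prob * target)
    soft_dice = (2.0 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps)
    return cross_entropy + (1.0 - soft_dice)
```

Under these assumptions, a prediction that matches the mask closely gives a loss near zero, whereas an inverted prediction is penalized by both terms.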

The same data augmentation methods were applied to

prevent overfitting. The batch size was set adaptively according to

the available GPU memory. Training ran for 500 epochs, by which point

the model had converged. During the training process, 5-fold

cross-validation was utilized to optimize computational efficiency

and reduce variance. Connected component analysis was

used as a postprocessing technique. After inference, the tumors

could be segmented automatically.
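As an illustration of connected component postprocessing, the sketch below removes small isolated foreground clusters from a binary prediction while keeping every larger component, which matters for multifocal lesions. The filtering rule, the `min_voxels` threshold and the function name are hypothetical; the text does not state which rule was applied. A flood fill is written out in plain NumPy so that the example is self-contained.

```python
from collections import deque

import numpy as np


def remove_small_components(mask, min_voxels=10):
    """Keep only 6-connected foreground components with at least min_voxels voxels."""
    mask = np.asarray(mask).astype(bool)
    visited = np.zeros_like(mask, dtype=bool)
    cleaned = np.zeros_like(mask, dtype=bool)
    # Face-adjacent neighbors in 3D (6-connectivity).
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

    for seed in zip(*np.nonzero(mask)):
        if visited[seed]:
            continue
        # Breadth-first flood fill collects one connected component.
        component = [seed]
        visited[seed] = True
        queue = deque([seed])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= n[i] < mask.shape[i] for i in range(3))
                if inside and mask[n] and not visited[n]:
                    visited[n] = True
                    component.append(n)
                    queue.append(n)
        # Copy the component to the output only if it is large enough.
        if len(component) >= min_voxels:
            for voxel in component:
                cleaned[voxel] = True
    return cleaned
```

On full-size volumes a library routine for component labeling would be much faster; the loop above only shows the logic.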

Statistical analysis

Categorical variables are presented as n (%), whilst

continuous variables are summarized as mean ± standard deviation

for normally distributed data, or as median (interquartile range)

for non-normally distributed data. The Mann-Whitney U test was applied to

compare continuous clinical variables, whilst the χ²

test was used to assess the difference in categorical clinical

variables between groups. The Dice similarity coefficient (DSC),

which provides a measure of spatial overlap for each voxel of

segmented tissue, was used to evaluate the model performance.

Volumes of tumor components were calculated and volumetric

agreement was evaluated between the ground truth and automated

segmentations using the Wilcoxon rank-sum test. Pearson's

correlation coefficient (r) was calculated and a Bland-Altman

analysis was performed. P<0.05 was considered to indicate a

statistically significant difference. All statistical analyses were

performed using Python (version 3.5.6; Python Software

Foundation).
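The agreement measures named above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the analysis code of the study: the function names are hypothetical, and the voxel spacing in the usage note (0.5 × 0.5 × 5 mm) is an assumption derived from the stated 256 mm field of view, 512×512 matrix and 5 mm slice thickness, ignoring any inter-slice gap or resampling.

```python
import numpy as np


def dice_coefficient(pred, truth):
    """DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * np.logical_and(pred, truth).sum() / denom


def volume_cm3(mask, spacing_mm):
    """Tumor volume in cm^3 from a binary mask and the voxel spacing in mm."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(np.asarray(mask).astype(bool).sum()) * voxel_mm3 / 1000.0


def pearson_r(a, b):
    """Pearson's correlation coefficient between two series of volumes."""
    return float(np.corrcoef(a, b)[0, 1])


def bland_altman(a, b):
    """Return the mean difference (bias) and the 95% limits of agreement."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd
```

For example, `volume_cm3(mask, (0.5, 0.5, 5.0))` converts a voxel count to cubic centimeters under the spacing assumption above, and `bland_altman(auto_volumes, manual_volumes)` summarizes how far automated volumes deviate from the ground truth on average.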

Results

Study population

In the present study, the PCNSL cohort comprised 49

patients (27 men and 22 women) with a mean age of 58.4±9.1 years.

The detailed patient demographics of the training and validation

sets are presented in Table I. The

diagnosis of PCNSL was confirmed through stereotactic biopsy in 40

of the cases and through surgical specimens in 9 of the cases. In

addition, systemic lymphatic disease was ruled out by additional

imaging and bone marrow biopsy in all cases. The majority of the

patients received chemotherapy (91.8%). A total of 93.8% of the

PCNSLs were located in the supratentorial region, and 71.4% showed

multifocal tumor spread.

Table I. Demographic and clinical characteristics of all patients with primary central nervous system lymphoma in the present study (n=49).

| Characteristic | Value |
|---|---|
| Sex | |
| Male | 27 (55.1) |
| Female | 22 (44.9) |
| Age, years | 58.4±9.1 |
| Tumor location | |
| Telencephalon | 37 (75.5) |
| Thalamus | 1 (2.0) |
| Brainstem | 5 (10.2) |
| Cerebellum | 6 (12.2) |
| History of malignancy | |
| No | 47 (95.9) |
| Yes | 2 (4.1) |

Values are presented as n (%) or mean ± standard deviation.

Evaluation of the segmentation model

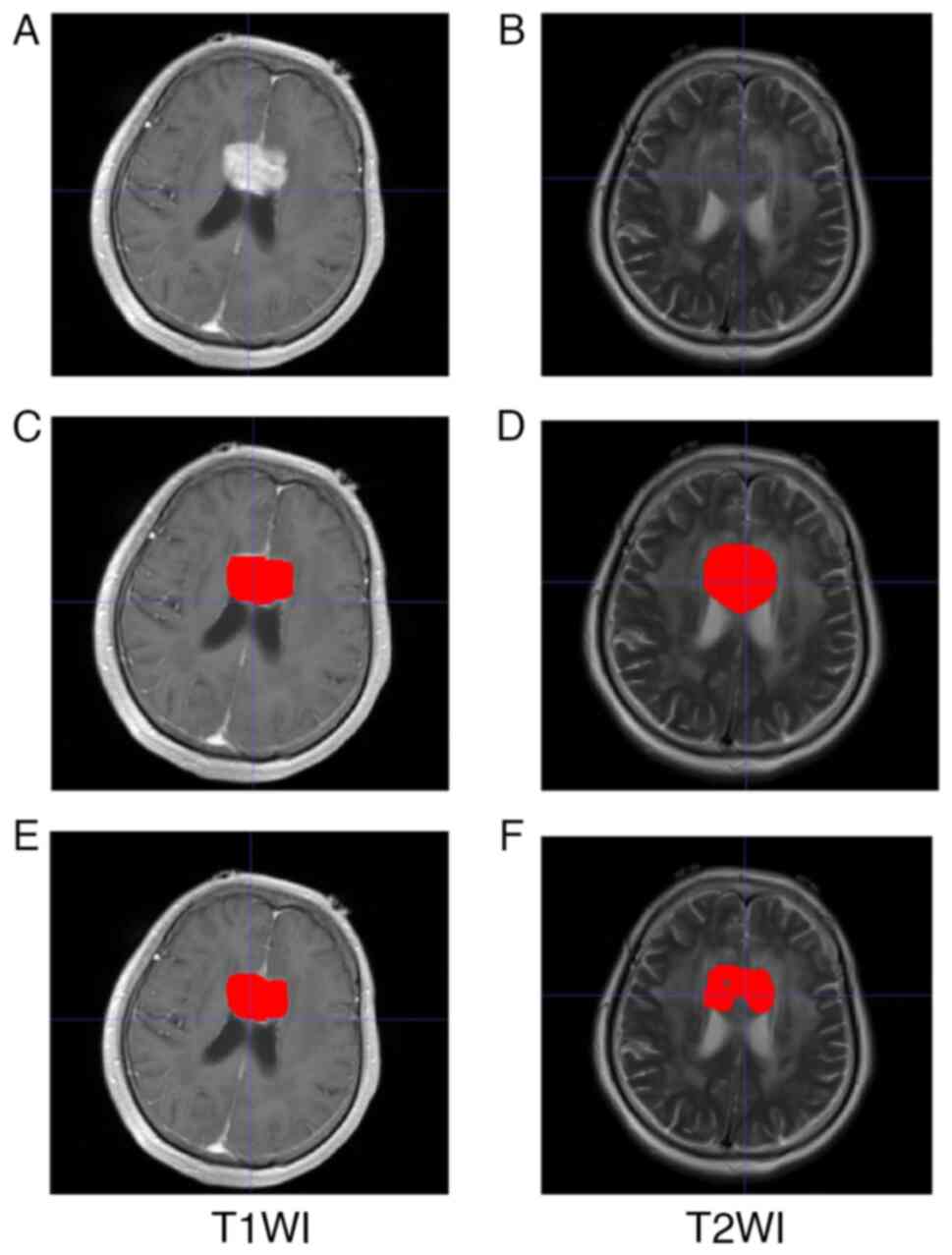

The performance results for the segmentation models

are outlined in Table II. Compared

with the ground truth, the T1WI sequences segmentation model

achieved the optimal performance with a training dice of 0.923, a

testing dice of 0.830 and an outer validation dice of 0.801. The

T1WI sequences model and the T2WI sequences model yielded a mean

tumor volume of 15.64±14.26 and 25.77±24.20 cm³,

respectively. Representative images of two different automated

segmentation examples from a 57-year-old female patient are shown

in Fig. 3.

Table II. Correlation coefficients, dice coefficients and the significant differences in tumor volume between the tumor volumes of the training, testing and outer datasets.

| Dataset | Correlation coefficient, r (T1WI) | Correlation coefficient, r (T2WI) | Dice coefficient (T1WI) | Dice coefficient (T2WI) | P-value (T1WI) | P-value (T2WI) |
|---|---|---|---|---|---|---|
| Training | 0.76a | 0.89a | 0.923 | 0.761 | 0.329 | 0.229 |
| Testing | 0.98a | 0.66a | 0.830 | 0.647 | 0.093 | 0.779 |
| Outer | 0.95a | 0.98a | 0.801 | 0.643 | 0.013 | 0.477 |

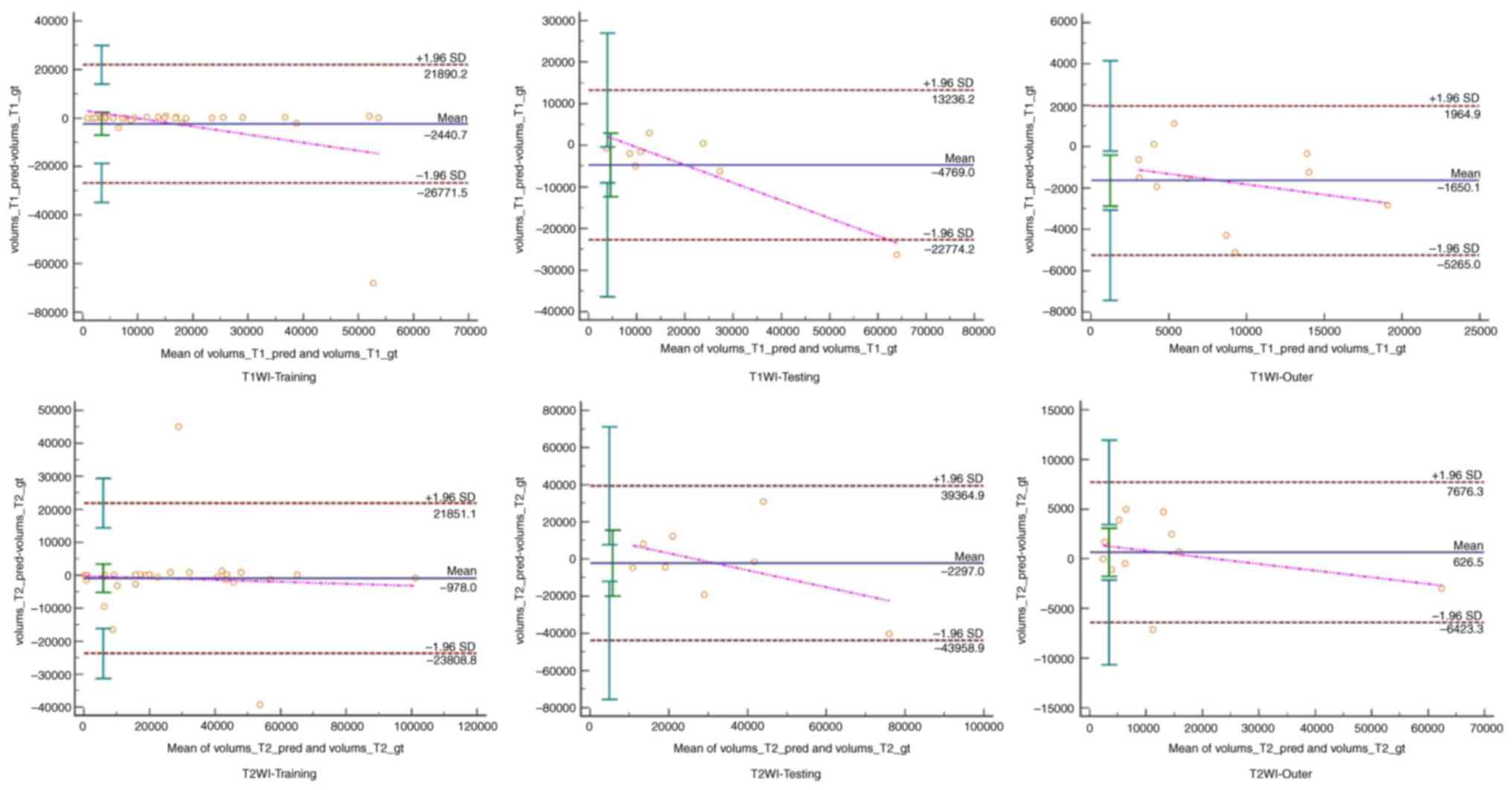

To compare volumetric assessment by automated

segmentations with the ground truth, regression lines for the two

segmentation models were drawn (Fig. 4). The correlation coefficients for the two models are

presented in Table II, which also

demonstrates the significant differences in tumor volume between

the training, testing and outer datasets. Furthermore, Bland-Altman

analysis revealed a good agreement between the manual and automated

segmentations (Fig. 5).

Discussion

Precise assessment of size is clinically relevant

for therapy planning, prognosis and monitoring in PCNSL. Automated

segmentation of PCNSL may enable a precise and objective assessment

of tumor burden and response in longitudinal imaging, which is

often difficult to determine due to the multifaceted appearance of PCNSL, with

multiple scattered lesions (22,23).

In the present study, two automated segmentation models of PCNSL

were established using T1C and T2 scans. The nature of PCNSL tumors

generally results in a complex and heterogeneous structure on

multiple MRI image sequences. However, the present study observed

that the automatic segmentation model demonstrated marked accuracy

in its segmentation performance.

The nnU-Net-based automated segmentation model in

the present study demonstrated a notable performance. Compared with

the ground truth, the T1WI sequences segmentation model achieved

the optimal performance with a training dice of 0.923, a testing

dice of 0.830 and an outer validation dice of 0.801. Bland-Altman

analysis revealed high agreement between manual and automated

segmentations. Furthermore, both models showed strong correlation

coefficients and the automated segmentation architecture was

successfully deployed across diverse input data types with ease.

Therefore, the deep learning model proposed in the present study is

highly clinically relevant as it allows for the automated detection

of cerebral masses. This can enable the preselection of lesions and

serve as a control mechanism for radiologists and treating

physicians.

In comparison with the previously suggested models,

the deep learning model in the present study demonstrated

superiority. In prior studies, several methods were proposed for

glioma, another type of central nervous system tumor, due to

similar imaging features and larger glioma datasets. Perkuhn et

al (32) reported DSCs ranging

from 0.62 to 0.86 for different tumor components of gliomas. This

trained segmentation model for gliomas could be successfully

applied to PCNSL without any decrease in segmentation performance,

despite the different appearance and overall complex structure of

PCNSL. Using a different convolutional neural network (CNN), Menze

et al (33) and Bousabarah

et al (34) calculated DSCs

of 0.74–0.85 and 0.84–0.90 for glioma segmentations, respectively.

Kickingereder et al (26)

reported DSCs of 0.89–0.93 for an artificial neural network trained

on a larger cohort of patients with glioblastoma. Pennig et

al (11) evaluated the efficacy

of a deep learning model initially trained on gliomas for fully

automated detection and segmentation of PCNSL on multiparametric

MRI including heterogeneous imaging data from several scanner

vendors and study centers. The median DSC was 0.76 for total tumor

volume (11).

CNNs require large annotated datasets for effective

training, as small datasets limit generalizability. This is

particularly challenging for lymphoma analysis, where variations in

lesion size, shape and metabolic activity complicate training

without reliable anatomical references. In terms of performance,

the present model achieved notable results using only a small

sample dataset compared with previous studies (35,36).

The T1WI model achieved an improved performance in comparison with

the T2WI, and this can be attributed to the improved visualization

of tumor morphology on contrast-enhanced T1WI, where tumors

typically exhibit distinct homogeneous enhancement patterns

(37). Moreover, peritumoral edema

appears hyperintense on T2WI, which reflects the infiltration and

dissemination of tumor cells along the perivascular space, and is

easily confused with the slightly hyperintense signal of the tumor

(37). The lower T2WI performance

was mainly related to imaging features, rather than model

architecture or training bias. Other studies have also reported a

similar phenomenon (10,38,39).

Therefore, T2WI performance may be improved in the following ways:

i) Data enhancement: Improve T2WI data quality through enhancement

technology; ii) multimodal fusion: Combine T1WI and T2WI data to

improve the segmentation effect; and iii) model optimization:

Incorporate a boundary sensing module into the segmentation network

to improve the extraction of target boundary features and refine

segmentation precision.

The main limitations of the present study include

the small sample size and the inherent constraints associated with

its retrospective design. Thus, further investigation is needed to

determine whether automatic segmentation for PCNSL is suitable for

specific clinical tasks and parameters. Despite evaluating the

automatic segmentation model using several MRI multi-sequence

images from multiple hospital treatment centers, it is essential to

acknowledge the potential bias associated with these images. It is

also acknowledged that the MRI techniques and learning methods used

in the present study could be further optimized. However, at

present, acquiring a substantial volume of MRI data from additional

patients with PCNSL for external validation remains challenging due

to the rarity of this condition. If more PCNSL imaging data become

accessible in the future, the performance of the model in the

present study could be further optimized. In subsequent studies,

the focus should be on compiling a more comprehensive and

higher-quality dataset of medical imaging data for PCNSL, refining

the methodology and validating the results in larger, multi-center

cohorts to improve the robustness of the model and enhance its

potential clinical applicability. The development of brain tumor

segmentation methods that can address the issue of missing

modalities should also be investigated. Furthermore, data on the

prognosis of PCNSL should be collected and the method should be refined

into a full-process automated diagnosis and treatment solution for

PCNSL. Although the present research is still in

the initial stage, the precise quantification of the lesions will

lay a solid foundation for this goal. Furthermore, future studies

should perform analyses to explore potential correlations between

the artificial intelligence-generated findings (such as tumor

segmentation results and predictive scores) and key clinical

outcomes, such as treatment response, progression-free survival or

overall survival, where data is available. These analyses should

aim to establish a stronger link between artificial intelligence

findings and clinical outcomes, thereby enhancing the translational

value and practical applicability of the model in the present

study.

In conclusion, the present study established two

automated segmentation models of PCNSL using T1C and T2. Compared

with the ground truth, the T1C sequences segmentation model

achieved optimal performance. The proposed automatic segmentation

model performed well despite the complex and multifaceted

appearance of PCNSL. This indicates its great potential for use in

the entire follow-up monitoring process for this lymphoma

subtype.

Acknowledgements

The authors would like to thank Dr Lixi Miao

(Department of Medical Imaging Center, Shengli Oilfield Central

Hospital) and Dr Ruyi Tian (Department of Radiology, Fourth Medical

Center Chinese PLA General Hospital, Beijing, P.R. China) for

assessing the images. Their evaluations provided the essential data

foundation for establishing the segmentation model. As doctors from

the hospitals that supplied the imaging data, they voluntarily

participated in the MRI evaluations but were not involved in any

other aspects of this study. In accordance with their wishes and

academic standards, they have not been listed as co-authors of this

manuscript.

Funding

The present project was funded by the Natural Science Foundation

of Dongying, China (grant no. 2023ZR028).

Availability of data and materials

The data generated in the present study may be

requested from the corresponding author.

Authors' contributions

TW, XT, JD, and YJ participated in study design and

manuscript conceptualization. TW developed the model and

performed the statistical analysis. GL and XT drafted the work. XT

revised the manuscript critically for important intellectual

content, provided a discussion of the results and an explanation of

the main nnU-Net techniques. JD and YJ submitted ethics

applications to five affiliated hospitals; recruited radiologists

for manual segmentation of imaging data and coordinated with

co-authors to distribute workloads. JD and YJ confirm the

authenticity of all the raw data. GL and WM contributed to the

conception of the work and oversaw project progress while ensuring

workflow execution. All authors read and approved the final

manuscript.

Ethics approval and consent to participate

The present retrospective study was performed in

compliance with the Helsinki Declaration and was approved by the

Independent Ethics Committees of the Fifth Medical Center of

Chinese PLA General Hospital, the Affiliated Hospital of Qingdao

University, Yantai Yuhuangding Hospital, Weifang People's Hospital

and Shengli Oilfield Central Hospital (March 12, 2024; approval no.

YX11202401101). The ethics committees waived the requirement for

obtaining informed consent from patients.

Patient consent for publication

The patient provided written informed consent for

the publication of any data and/or accompanying images.

Competing interests

The authors declare that they have no competing

interests.

References

|

1

|

Villano JL, Shaikh H, Dolecek TA and

McCarthy BJ: Age, gender, and racial differences in incidence and

survival in primary CNS lymphoma. Br J Cancer. 105:1414–1418. 2011.

View Article : Google Scholar : PubMed/NCBI

|

|

2

|

Chukwueke U, Grommes C and Nayak L:

Primary central nervous system lymphomas. Hematol Oncol Clin North

Am. 36:147–159. 2022. View Article : Google Scholar : PubMed/NCBI

|

|

3

|

Morales-Martinez A, Nichelli L,

Hernandez-Verdin I, Houillier C, Alentorn A and Hoang-Xuan K:

Prognostic factors in primary central nervous system lymphoma. Curr

Opin Oncol. 34:676–684. 2022. View Article : Google Scholar : PubMed/NCBI

|

|

4

|

Schaff LR and Grommes C: Primary central

nervous system lymphoma. Blood. 140:971–979. 2022. View Article : Google Scholar : PubMed/NCBI

|

|

5

|

Lukas RV, Stupp R, Gondi V and Raizer JJ:

Primary central nervous system Lymphoma-PART 1: Epidemiology,

diagnosis, staging, and prognosis. Oncology (Williston Park).

32:17–22. 2018.PubMed/NCBI

|

|

6

|

Sangeetha SKB, Muthukumaran V, Deeba K,

Rajadurai H, Maheshwari V and Dalu GT: Multiconvolutional transfer

learning for 3D brain tumor magnetic resonance images. Comput

Intell Neurosci. 2022:87224762022. View Article : Google Scholar : PubMed/NCBI

|

|

7

|

Sadad T, Rehman A, Munir A, Saba T, Tariq

U, Ayesha N and Abbasi R: Brain tumor detection and

multi-classification using advanced deep learning techniques.

Microsc Res Tech. 84:1296–1308. 2021. View Article : Google Scholar : PubMed/NCBI

|

|

8

|

Abd-Ellah MK, Awad AI, Khalaf AAM and

Hamed HFA: A review on brain tumor diagnosis from MRI images:

Practical implications, key achievements, and lessons learned. Magn

Reson Imaging. 61:300–318. 2019. View Article : Google Scholar : PubMed/NCBI

|

|

9

|

Lu G, Zhang Y, Wang W, Miao L and Mou W:

Machine learning and deep learning CT-based models for predicting

the primary central nervous system lymphoma and glioma types: A

multicenter retrospective study. Front Neurol. 13:9052272022.

View Article : Google Scholar : PubMed/NCBI

|

|

10

|

Xia W, Hu B, Li H, Shi W, Tang Y, Yu Y,

Geng C, Wu Q, Yang L, Yu Z, et al: Deep learning for automatic

differential diagnosis of primary central nervous system lymphoma

and glioblastoma: Multi-parametric magnetic resonance imaging based

convolutional neural network model. J Magn Reson Imaging.

54:880–887. 2021. View Article : Google Scholar : PubMed/NCBI

|

|

11

|

Pennig L, Hoyer UCI, Goertz L, Shahzad R,

Persigehl T, Thiele F, Perkuhn M, Ruge MI, Kabbasch C, Borggrefe J,

et al: Primary central nervous system lymphoma: Clinical evaluation

of automated segmentation on multiparametric MRI using deep

learning. J Magn Reson Imaging. 53:259–268. 2021. View Article : Google Scholar : PubMed/NCBI

|

|

12

|

Ramadan S, Radice T, Ismail A, Fiori S and

Tarella C: Advances in therapeutic strategies for primary CNS

B-cell lymphomas. Expert Rev Hematol. 15:295–304. 2022. View Article : Google Scholar : PubMed/NCBI

|

|

13

|

Batchelor TT: Primary central nervous

system lymphoma. Hematology Am Soc Hematol Educ Program.

2016:379–385. 2016. View Article : Google Scholar : PubMed/NCBI

|

|

14

|

Bonm AV, Ritterbusch R, Throckmorton P and

Graber JJ: Clinical imaging for diagnostic challenges in the

management of gliomas: A review. J Neuroimaging. 30:139–145. 2020.

View Article : Google Scholar : PubMed/NCBI

|

|

15

|

Huang RY, Bi WL, Griffith B, Kaufmann TJ,

la Fougère C, Schmidt NO, Tonn JC, Vogelbaum MA, Wen PY, Aldape K,

et al: Imaging and diagnostic advances for intracranial

meningiomas. Neuro Oncol. 21:i44–i61. 2019. View Article : Google Scholar : PubMed/NCBI

|

|

16

|

Yadav AS, Kumar S, Karetla GR,

Cotrina-Aliaga JC, Arias-Gonzáles JL, Kumar V, Srivastava S, Gupta

R, Ibrahim S, Paul R, et al: A feature extraction using

probabilistic neural network and BTFSC-Net model with deep learning

for brain tumor classification. J Imaging. 9:102022. View Article : Google Scholar : PubMed/NCBI

|

|

17

|

ZainEldin H, Gamel SA, El-Kenawy EM,

Alharbi AH, Khafaga DS, Ibrahim A and Talaat FM: Brain tumor

detection and classification using deep learning and Sine-cosine

fitness grey wolf optimization. Bioengineering (Basel). 10:182022.

View Article : Google Scholar : PubMed/NCBI

|

|

18

|

Taher F, Shoaib MR, Emara HM, Abdelwahab

KM, Abd El-Samie FE and Haweel MT: Efficient framework for brain

tumor detection using different deep learning techniques. Front

Public Health. 10:9596672022. View Article : Google Scholar : PubMed/NCBI

|

|

19

|

Hwang K, Park J, Kwon YJ, Cho SJ, Choi BS,

Kim J, Kim E, Jang J, Ahn KS, Kim S and Kim CY: Fully automated

segmentation models of supratentorial meningiomas assisted by

inclusion of normal brain images. J Imaging. 8:3272022. View Article : Google Scholar : PubMed/NCBI

|

|

20

|

Dang K, Vo T, Ngo L and Ha H: A deep

learning framework integrating MRI image preprocessing methods for

brain tumor segmentation and classification. IBRO Neurosci Rep.

13:523–532. 2022. View Article : Google Scholar : PubMed/NCBI

|

|

21

|

Lin YY, Guo WY, Lu CF, Peng SJ, Wu YT and

Lee CC: Application of artificial intelligence to stereotactic

radiosurgery for intracranial lesions: Detection, segmentation, and

outcome prediction. J Neurooncol. 161:441–450. 2023. View Article : Google Scholar : PubMed/NCBI

|

|

22

|

Laukamp KR, Pennig L, Thiele F, Reimer R,

Görtz L, Shakirin G, Zopfs D, Timmer M, Perkuhn M and Borggrefe J:

Automated meningioma segmentation in multiparametric MRI:

comparable effectiveness of a deep learning model and manual

segmentation. Clin Neuroradiol. 31:357–366. 2021. View Article : Google Scholar : PubMed/NCBI

|

|

23

|

Chang K, Beers AL, Bai HX, Brown JM, Ly

KI, Li X, Senders JT, Kavouridis VK, Boaro A, Su C, et al:

Automatic assessment of glioma burden: A deep learning algorithm

for fully automated volumetric and bidimensional measurement. Neuro

Oncol. 21:1412–1422. 2019. View Article : Google Scholar : PubMed/NCBI

24. Peng J, Luo H, Zhao G, Lin C, Yi X and Chen S: A review of medical image segmentation algorithms based on deep learning. Computer Engineering Appl. 57:44–57. 2021 (In Chinese).

25. Latif G: DeepTumor: Framework for brain MR image classification, segmentation and tumor detection. Diagnostics (Basel). 12:2888. 2022.

26. Kickingereder P, Isensee F, Tursunova I, Petersen J, Neuberger U, Bonekamp D, Brugnara G, Schell M, Kessler T, Foltyn M, et al: Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: A multicentre, retrospective study. Lancet Oncol. 20:728–740. 2019.

27. Isensee F, Jaeger PF, Kohl SAA, Petersen J and Maier-Hein KH: nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. 18:203–211. 2021.

28. Pemberton HG, Wu J, Kommers I, Müller DMJ, Hu Y, Goodkin O, Vos SB, Bisdas S, Robe PA, Ardon H, et al: Multi-class glioma segmentation on real-world data with missing MRI sequences: Comparison of three deep learning algorithms. Sci Rep. 13:18911. 2023.

29. Ganesan P, Feng R, Deb B, Tjong FVY, Rogers AJ, Ruipérez-Campillo S, Somani S, Clopton P, Baykaner T, Rodrigo M, et al: Novel domain knowledge-encoding algorithm enables label-efficient deep learning for cardiac CT segmentation to guide atrial fibrillation treatment in a pilot dataset. Diagnostics (Basel). 14:1538. 2024.

30. Fazekas F, Chawluk JB, Alavi A, Hurtig HI and Zimmerman RA: MR signal abnormalities at 1.5 T in Alzheimer's dementia and normal aging. AJR Am J Roentgenol. 149:351–356. 1987.

31. Isensee F, Petersen J, Klein A, Zimmerer D, Jaeger PF, Kohl S, Wasserthal J, Koehler G, Norajitra T, Wirkert S and Maier-Hein KH: nnU-Net: Self-adapting framework for U-Net-based medical image segmentation. ArXiv. abs/1809.10486. 2018.

32. Perkuhn M, Stavrinou P, Thiele F, Shakirin G, Mohan M, Garmpis D, Kabbasch C and Borggrefe J: Clinical evaluation of a multiparametric deep learning model for glioblastoma segmentation using heterogeneous magnetic resonance imaging data from clinical routine. Invest Radiol. 53:647–654. 2018.

33. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, Burren Y, Porz N, Slotboom J, Wiest R, et al: The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans Med Imaging. 34:1993–2024. 2015.

34. Bousabarah K, Letzen B, Tefera J, Savic L, Schobert I, Schlachter T, Staib LH, Kocher M, Chapiro J and Lin M: Automated detection and delineation of hepatocellular carcinoma on multiphasic contrast-enhanced MRI using deep learning. Abdom Radiol (NY). 46:216–225. 2021.

35. Naser PV, Maurer MC, Fischer M, Karimian-Jazi K, Ben-Salah C, Bajwa AA, Jakobs M, Jungk C, Jesser J, Bendszus M, et al: Deep learning aided preoperative diagnosis of primary central nervous system lymphoma. iScience. 27:109023. 2024.

36. Cassinelli Petersen GI, Shatalov J, Verma T, Brim WR, Subramanian H, Brackett A, Bahar RC, Merkaj S, Zeevi T, Staib LH, et al: Machine learning in differentiating gliomas from primary CNS lymphomas: A systematic review, reporting quality, and risk of bias assessment. AJNR Am J Neuroradiol. 43:526–533. 2022.

37. Ferreri AJM, Calimeri T, Cwynarski K, Dietrich J, Grommes C, Hoang-Xuan K, Hu LS, Illerhaus G, Nayak L, Ponzoni M and Batchelor TT: Primary central nervous system lymphoma. Nat Rev Dis Primers. 9:29. 2023.

38. Gill CM, Loewenstern J, Rutland JW, Arib H, Pain M, Umphlett M, Kinoshita Y, McBride RB, Bederson J, Donovan M, et al: Peritumoral edema correlates with mutational burden in meningiomas. Neuroradiology. 63:73–80. 2021.

39. Reszec J, Hermanowicz A, Rutkowski R, Turek G, Mariak Z and Chyczewski L: Expression of MMP-9 and VEGF in meningiomas and their correlation with peritumoral brain edema. Biomed Res Int. 2015:646853. 2015.